This week, I hosted another installment of the “Tech Roundup” for the Federalist Society’s Regulatory Transparency Project. This latest 30-minute episode was on “Autonomous Vehicles: Where Are We Now?” I was joined by Marc Scribner, a transportation policy expert with the Reason Foundation. We provided a quick update on where federal and state policy for AVs stands as of early 2022 and offered some thoughts about what might happen next at the Biden Administration’s Department of Transportation (DOT). Some experts believe that the DOT could be ready to start aggressively regulating driverless car tech or AV companies, especially Elon Musk’s Tesla. Tune in to hear what Marc and I have to say about all that and more.

The race for artificial intelligence (AI) supremacy is on with governments across the globe looking to take the lead in the next great technological revolution. As they did before during the internet era, the US and Europe are once again squaring off with competing policy frameworks.

In early January, the Trump Administration announced a new light-touch regulatory framework and then followed up with a proposed doubling of federal R&D spending on AI and quantum computing. This week, the European Commission issued a major policy framework for AI technologies and billed it as “a European approach to excellence and trust.”

It seems the EU basically wants to have its cake and eat it too by marrying an ambitious industrial policy with a precautionary regulatory regime. We’ve seen this show before. Europe is doubling down on the same policy regime it used for the internet and digital commerce. It did not work out well for the continent then, and there are reasons to think it will backfire again for AI technologies.

Originally published on the AIER blog on 9/8/19 as “The Worst Regulation Ever Proposed.”

———-

Imagine a competition to design the most onerous and destructive economic regulation ever conceived. A mandate that would make all other mandates blush with embarrassment for not being burdensome or costly enough. What would that Worst Regulation Ever look like?

Unfortunately, Bill de Blasio has just floated a few proposals that could take first and second prize in that hypothetical contest. In a new Wired essay, the New York City mayor and 2020 Democratic presidential candidate explains “Why American Workers Need to Be Protected From Automation,” and aims to accomplish that through a new agency with vast enforcement powers and a new tax.

Taken together, these ideas represent one of the most radical regulatory plans any American politician has yet concocted.

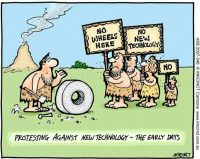

Politicians, academics, and many others have been panicking over automation at least since the days when the Luddites were smashing machines in protest over growing factory mechanization. With the growth of more sophisticated forms of robotics, artificial intelligence, and workplace automation today, there has been a resurgence of these fears and a renewed push for sweeping regulations to throw a wrench in the gears of progress. Mayor de Blasio is looking to outflank his fellow Democratic candidates for president with an anti-automation plan that may be the most extreme proposal of its kind.

Autonomous vehicles are quickly becoming a reality. Waymo just launched a driverless taxi service in Arizona. Part of GM’s cuts were based on a decision to refocus its efforts on autonomous vehicle technology. Tesla has repeatedly promised new features that take us closer than ever to a self-driving future. Much of this progress has been supported by the light-touch approach that both state and federal regulators have taken up to this point. This approach has allowed the technology to develop rapidly, and any federal legislation that might derail this progress should be carefully considered.

For over a year, the Senate has considered passing federal legislation for autonomous vehicle technology, the AV START Act, after similar legislation already passed the House of Representatives. This bill would clarify the appropriate roles for state and federal authorities and preempt some state actions when it comes to regulating autonomous vehicles, which will hopefully end some of the patchwork problems that have emerged. While federal legislation regarding preemption may be necessary for autonomous vehicles to truly revolutionize transportation, other parts of the bill could create increased regulatory burdens that add speed bumps on the path of this life-saving innovation.

This week I will be traveling to Montreal to participate in the 2018 G7 Multistakeholder Conference on Artificial Intelligence. This conference follows the G7’s recent Ministerial Meeting on “Preparing for the Jobs of the Future” and will also build upon the G7 Innovation Ministers’ Statement on Artificial Intelligence. The goal of Thursday’s conference is to “focus on how to enable environments that foster societal trust and the responsible adoption of AI, and build upon a common vision of human-centric AI.” About 150 participants selected by G7 partners are expected to participate, and I was invited to attend as a U.S. expert, which is a great honor.

I look forward to hearing and learning from other experts and policymakers who are attending this week’s conference. I’ve been spending a lot of time thinking about the future of AI policy in recent books, working papers, essays, and debates. My most recent essay concerning a vision for the future of AI policy was co-authored with Andrea O’Sullivan, and it appeared as part of a point/counterpoint debate in the latest edition of Communications of the ACM. The ACM is the Association for Computing Machinery, the world’s largest computing society, which “brings together computing educators, researchers, and professionals to inspire dialogue, share resources, and address the field’s challenges.” The latest edition of the magazine features about a dozen different essays on “Designing Emotionally Sentient Agents” and the future of AI and machine learning more generally.

In our portion of the debate in the new issue, Andrea and I argue that “Regulators Should Allow the Greatest Space for AI Innovation.” “While AI-enabled technologies can pose some risks that should be taken seriously,” we note, “it is important that public policy not freeze the development of life-enriching innovations in this space based on speculative fears of an uncertain future.” We contrast two different policy worldviews — the precautionary principle versus permissionless innovation — and argue that:

artificial intelligence technologies should largely be governed by a policy regime of permissionless innovation so that humanity can best extract all of the opportunities and benefits they promise. A precautionary approach could, alternatively, rob us of these life-saving benefits and leave us all much worse off.

That’s not to say that AI won’t pose some serious policy challenges for us going forward that deserve serious attention. Rather, we are warning against the dangers of allowing worst-case thinking to be the default position in these discussions.

By Adam Thierer and Jennifer Huddleston Skees

There was horrible news from Tempe, Arizona, this week as a pedestrian was struck and killed by a driverless car owned by Uber. This is the first fatality of its kind and is drawing widespread media attention as a result. According to both police statements and Uber itself, the investigation into the accident is ongoing and Uber is assisting in it. While this is certainly a tragic event, we cannot let it cost us the life-saving potential of autonomous vehicles.

While any fatal traffic accident involving a driverless car is certainly sad, we can’t ignore the fact that each and every day in the United States letting human beings drive on public roads is proving far more dangerous. This single event has led some critics to wonder why we were allowing driverless cars to be tested on public roads at all before they have been proven to be 100% safe. Driverless cars can help reverse a public health disaster decades in the making, but only if policymakers allow real-world experimentation to continue.

Let’s be more concrete about this: Each day, Americans take 1.1 billion trips driving 11 billion miles in vehicles that weigh on average between 1.5 and 2 tons. Sadly, about 100 people die and over 6,000 are injured each day in car accidents. 94% of these accidents have been attributed to human error, and this deadly toll has been rising as we become more distracted while driving. Moreover, according to the Centers for Disease Control and Prevention, almost 6,000 pedestrians were killed in traffic accidents in 2016, which means there was roughly one crash-related pedestrian death every 1.6 hours. In Arizona, the issue is even more pronounced: the state ranks 6th worst for pedestrians, and the Phoenix area ranks as the 16th worst metro for such accidents nationally.

Autonomous cars have been discussed rather thoroughly recently, and at this point it seems a question of when and how, rather than if, they will become standard. But as this issue starts to settle, new questions about the application of autonomous technology to other types of transportation are becoming ripe for policy debates. While a great deal of attention seems to be focused on the potential to revolutionize the trucking and shipping industries, not as much attention has been paid to how automation may help improve both intercity and intracity bus travel or other public and private transit like trains. The recent requests for comment from the Federal Transit Administration show that policymakers are starting to consider these other modes of transit in preparing their next recommendations for autonomous vehicles. Here are 5 issues that will need to be considered for an autonomous transit system.

Tesla, Volvo, and Cadillac have each released a vehicle with features that push beyond the standard level 2 features and approach a level 3 “self-driving” automation system, where the driver still needs to be present but the car can do most of the work. While there have been some notable accidents, most of these were tied to driver error or behavior and not the technology. Still, autonomous vehicles hold the promise of potentially reducing traffic accidents by more than 90% if widely adopted. However, fewer accidents and a reduction in the potential for human error in driving could change the function and formulas of the auto insurance market.

Congress is poised to act on “driverless car” legislation that might help us achieve one of the greatest public health success stories of our lifetime by bringing down the staggering costs associated with car crashes.

The SELF DRIVE Act currently awaiting a vote in the House of Representatives would preempt the existing state laws concerning driverless cars and replace them with a federal standard. The law would formalize the existing NHTSA standards for driverless cars and establish NHTSA’s role as the regulator of the design, construction, and performance of this technology. The states would regulate driverless cars and their technology in the same way they regulate today’s driver-operated motor vehicles.

It is important we get policy right on this front because motor vehicle accidents result in over 35,000 deaths and over 2 million injuries each year. These numbers continue to rise as more people hit the roads due to lower gas prices and as more distractions while driving emerge. The National Highway Traffic Safety Administration (NHTSA) estimates 94 percent of these crashes are caused by driver error.

Driverless cars provide a potential solution to this tragedy. One study estimated that widespread adoption of such technology would avoid about 28 percent of all motor vehicle accidents and prevent nearly 10,000 deaths each year. This lifesaving technology may be generally available sooner than expected if innovators are allowed to freely develop it.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.