[Cross-posted from Medium.]

Over the past decade, tech policy experts have debated the impact of “software eating the world.” In coming years, the debate will turn to what happens when that software can be described as artificial intelligence (AI) and comes to have an even greater influence. Every segment of the economy will be touched by AI, machine learning (ML), robotics, and the Computational Revolution more generally. And public policy will be radically transformed along the way.

This process is already well underway and accelerating every day. AI, ML, and advanced robotics technologies are rapidly transforming a diverse array of sectors and professions such as medicine and health care, financial services, transportation, retail, agriculture, entertainment, energy, aviation, the automotive industry, and many others.

As every industry integrates AI, all policy will involve AI and computational considerations. The stakes here are profound for individuals, economies, and nations. It is not hyperbole when we hear how AI will drive “the biggest geopolitical revolution in human history” and that “AI governance will become among the most important global issue areas.”

Unfortunately, many policy wonks — especially liberty-loving, pro-innovation policy analysts — are unaware of or ignoring these realities. They are not focusing on preparing for the staggering constellation of issues and controversies that await us in the world of AI policy.

Innovation defenders need to keep the Gretzky principle in mind. Hockey legend Wayne Gretzky once famously noted that the key to his success was, “I skate to where the puck is going to be, not where it has been.” Yet today, many analysts and organizations are focused on where the tech policy puck is now rather than anticipating where it will be tomorrow.

Because of its breadth, AI policy will be the most important technology policy fight of the next quarter century. As proposals to regulate AI proliferate, those who are passionate about preserving the freedom to innovate must prepare to meet the challenge. Here’s what we need to do to prepare for it.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.

Running List of My Research on AI, ML & Robotics Policy

by Adam Thierer on July 29, 2022

[last updated 5/2/2024]

This is a running list of all the essays and reports I’ve already rolled out on the governance of artificial intelligence (AI), machine learning (ML), and robotics. Why have I decided to spend so much time on this issue? Because this will become the most important technological revolution of our lifetimes. Every segment of the economy will be touched in some fashion by AI, ML, robotics, and the power of computational science. It should be equally clear that public policy will be radically transformed along the way.

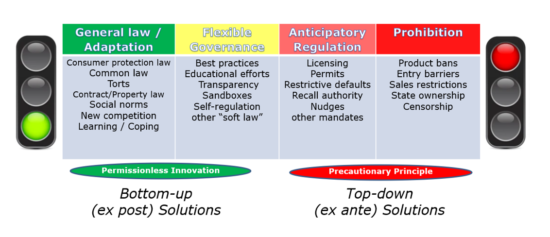

Eventually, all policy will involve AI policy and computational considerations. As AI “eats the world,” it eats the world of public policy along with it. The stakes here are profound for individuals, economies, and nations. As a result, AI policy will be the most important technology policy fight of the next decade, and perhaps next quarter century. Those who are passionate about the freedom to innovate need to prepare to meet the challenge as proposals to regulate AI proliferate.

There are many socio-technical concerns surrounding algorithmic systems that deserve serious consideration and appropriate governance steps to ensure that these systems are beneficial to society. However, there is an equally compelling public interest in ensuring that AI innovations are developed and made widely available to help improve human well-being across many dimensions. And that’s the case that I’ll be dedicating my life to making in coming years.

Here’s the list of what I’ve done so far. I will continue to update this as new material is released.