This week I will be traveling to Montreal to participate in the 2018 G7 Multistakeholder Conference on Artificial Intelligence. This conference follows the G7’s recent Ministerial Meeting on “Preparing for the Jobs of the Future” and will also build upon the G7 Innovation Ministers’ Statement on Artificial Intelligence. The goal of Thursday’s conference is to “focus on how to enable environments that foster societal trust and the responsible adoption of AI, and build upon a common vision of human-centric AI.” About 150 participants selected by G7 partners are expected to participate, and I was invited to attend as a U.S. expert, which is a great honor.

I look forward to hearing and learning from other experts and policymakers who are attending this week’s conference. I’ve been spending a lot of time thinking about the future of AI policy in recent books, working papers, essays, and debates. My most recent essay concerning a vision for the future of AI policy was co-authored with Andrea O’Sullivan and it appeared as part of a point/counterpoint debate in the latest edition of the Communications of the ACM. The ACM is the Association for Computing Machinery, the world’s largest computing society, which “brings together computing educators, researchers, and professionals to inspire dialogue, share resources, and address the field’s challenges.” The latest edition of the magazine features about a dozen different essays on “Designing Emotionally Sentient Agents” and the future of AI and machine-learning more generally.

In our portion of the debate in the new issue, Andrea and I argue that “Regulators Should Allow the Greatest Space for AI Innovation.” “While AI-enabled technologies can pose some risks that should be taken seriously,” we note, “it is important that public policy not freeze the development of life-enriching innovations in this space based on speculative fears of an uncertain future.” We contrast two different policy worldviews — the precautionary principle versus permissionless innovation — and argue that:

artificial intelligence technologies should largely be governed by a policy regime of permissionless innovation so that humanity can best extract all of the opportunities and benefits they promise. A precautionary approach could, alternatively, rob us of these life-saving benefits and leave us all much worse off.

That’s not to say that AI won’t pose some serious policy challenges for us going forward that deserve serious attention. Rather, we are warning against the dangers of allowing worst-case thinking to be the default position in these discussions.

But what about some of the policy concerns regarding AI, including privacy, “algorithmic accountability,” or more traditional fears about automation leading to job displacement or industrial disruption? Some of these issues deserve greater scrutiny, but as Andrea and I pointed out in a much longer paper with Raymond Russell, there often exist better ways of dealing with such issues before resorting to preemptive, top-down controls on fast-moving, hard-to-predict technologies.

“Soft law” options will often serve us better than old hard law approaches. Soft law mechanisms, as I write in my latest law review article with Jennifer Skees and Ryan Hagemann, are a useful way to bring diverse parties together to address pressing policy concerns without destroying the innovative promise of important new technologies. Among other things, soft law includes multistakeholder processes and ongoing efforts to craft flexible “best practices.” It can also include important collaborative efforts such as this recent IEEE “Global Initiative on Ethics of Autonomous and Intelligent Systems,” which serves as “an incubation space for new standards and solutions, certifications and codes of conduct, and consensus building for ethical implementation of intelligent technologies.” This approach brings together diverse voices from across the globe to develop rough consensus on what “ethically-aligned design” looks like for AI and aims to establish a framework and set of best practices for the development of these technologies over time.

Others have developed similar frameworks, including the ACM itself. The ACM developed a Code of Ethics and Professional Conduct in the early 1970s and then refined it in the early 1990s and then again just recently in 2018. Each iteration of the ACM Code reflected ongoing technological developments from the mainframe era to the PC and Internet revolution and on through today’s machine-learning and AI era. The latest version of the Code “affirms an obligation of computing professionals, both individually and collectively, to use their skills for the benefit of society, its members, and the environment surrounding them,” and insists that computing professionals “should consider whether the results of their efforts will respect diversity, will be used in socially responsible ways, will meet social needs, and will be broadly accessible.” The document also stresses how, “[a]n essential aim of computing professionals is to minimize negative consequences of computing, including threats to health, safety, personal security, and privacy. When the interests of multiple groups conflict, the needs of those less advantaged should be given increased attention and priority.”

Of course, over time, more targeted or applied best practices and codes of conduct will be formulated as new technological developments make them necessary. It is impossible to perfectly anticipate and plan for all the challenges that we may face down the line. But we can establish some rough best practices and ethical guidelines to help us deal with some of them. As we do so, we need to think hard about how to craft those principles and policies in a way that does not undermine the potentially amazing, life-enriching, and potentially even life-saving benefits that AI technologies could bring about.

You can hear more about these and other issues surrounding the future of AI in this 6-minute video that Communications of the ACM put together to coincide with my debate with Oren Etzioni of the Allen Institute for Artificial Intelligence. As you will probably notice, there’s actually a lot more common ground between us in this discussion than you might initially suspect. For example, we agree that it would be a serious mistake to regulate AI at the general-purpose level and that it instead makes more sense to zero in on specific AI applications to determine where policy interventions might be needed.

Of course, things get more contentious when we consider what kind of policy interventions we might want for specific AI applications, and also the much more challenging question about how to define and measure “harm” in this context. And this all assumes we can even come to some general consensus about how to first define what we even mean by “artificial intelligence” or “robotics” in general. That’s harder than many realize and it is important because it has a bearing on the overall scope and practicality of regulation in various contexts.

Another thing that seems to be the source of serious ongoing debate between people in this field concerns the wisdom of creating an entirely new agency or centralized authority of some sort to oversee or guide the development of AI or robotics. I’ve debated that question many times with Ryan Calo, who first pitched the idea a few years back in a working paper for Brookings. In response, I noted that we already have quite a few “robot regulators” in existence today in the form of technocratic agencies that oversee the specific development of various types of robotic and AI-oriented applications. For example, NHTSA already oversees driverless cars, the FAA regulates drones, and the FDA handles AI-based medical devices and applications. Will adding another big, over-arching Robotics Commission really add much value to the process? Or will it simply add another bureaucratic layer of red tape to the process of getting life-enriching services out to the public? I doubt, for example, that the Digital Revolution would have been somehow improved much had America created a Federal Computer Commission or Federal Internet Commission 25 years ago.

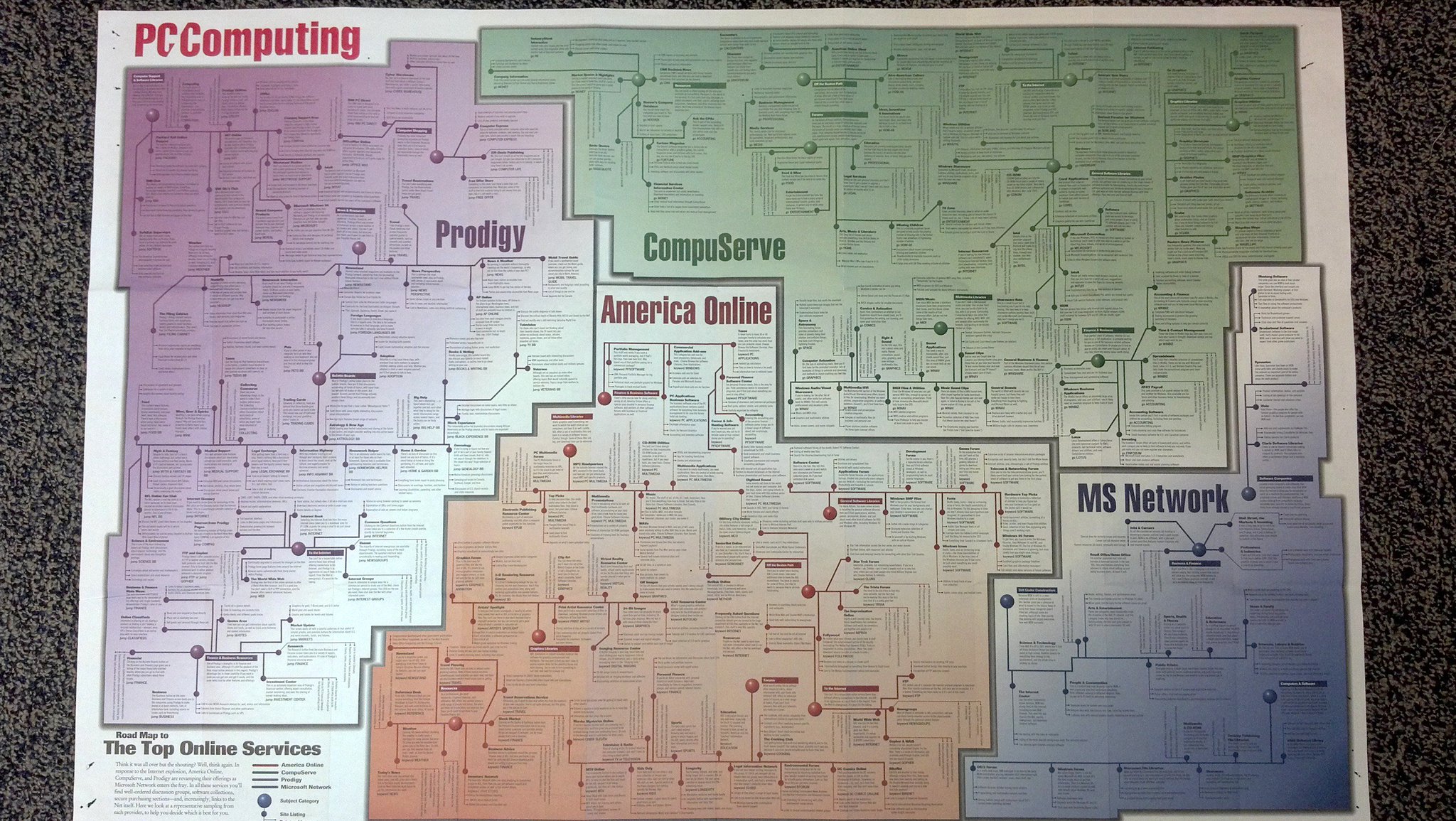

Moreover, had we adopted such entities, I worry about how the tech companies of an earlier generation might have utilized that process to keep new players and technologies from emerging. As I noted this week in a tweet that got a lot of attention, I used to have the adjoining poster from PC Computing magazine on my office wall over 20 years ago. It was entitled, “Roadmap to Top Online Services,” and showed how the powerful Big 4 online service providers (America Online, Prodigy, CompuServe, and Microsoft) were spreading their tentacles. People used to see this poster on my wall and ask me whether there was any hope of disrupting the perceived choke-hold that these companies had on the market at the time.

Of course, we now look back and laugh at the idea that these firms could have bottled up innovation and kept competition at bay. But ask yourself: When disruptive innovations appeared on the scene, what would those incumbent firms have done if they had regulators to run to for help down at a Federal Computer Commission or Federal Internet Commission? I think we know exactly what they would have done because the lamentable history of so much Federal Communications Commission regulation shows us that the powerful will grab for the levers of power wherever they exist. Some critics don’t accept the idea that “rent-seeking” and regulatory capture are real problems, or they believe that we can find creative ways to avoid those problems. But history shows this has been a recurring problem in countless sectors and one that we should try to avoid as much as possible by not establishing mechanisms that could prevent beneficial forms of competition and innovation from coming about to begin with.

That could certainly happen right now with the regulatory mechanisms already in place. For example, just this week, Jennifer Huddleston Skees and I wrote about the dangers of “Emerging Tech Export Controls Run Amok,” as the Trump Administration ponders a potentially massive expansion of export restrictions on a wide variety of technologies. More than a dozen different AI or autonomous system technologies appear on the list for consideration. That could pose real trouble not just for commercial innovators in this space, but also for non-commercial research and collaborative open source efforts involving these technologies.

Again, that doesn’t mean AI and robotics should develop in a complete policy vacuum. We need “governance,” but we don’t need the heavy-handed, top-down, competition-killing, innovation-restricting regulatory regimes of the past. I continue to believe that more flexible, adaptive “soft law” mechanisms provide the most reasonable path forward for most of the concerns we hear about AI and robotics today. These are challenging issues, however, and I look forward to learning more from other experts in the field when I visit Montreal for this week’s G7 discussion.

_____________________________________

Additional Reading:

- “Should the Government Regulate Artificial Intelligence?” Wall Street Journal debate between Ryan Calo, Julia Powles, and Adam Thierer, April 29, 2018.

- Adam Thierer, Andrea O’Sullivan & Raymond Russell, “Artificial Intelligence and Public Policy,” Research Paper, Mercatus Center at George Mason University, August 23, 2017.

- Adam Thierer, “Which Emerging Technologies Are ‘Weapons of Mass Destruction’?” Technology Liberation Front, August 26, 2016.

- Adam Thierer, “Wendell Wallach on the Challenge of Engineering Better Technology Ethics”

- Adam Thierer, “Problems with Precautionary Principle-Minded Tech Regulation & a Federal Robotics Commission,” Technology Liberation Front, September 22, 2014.

- Adam Thierer, “Are ‘Permissionless Innovation’ and ‘Responsible Innovation’ Compatible?” Technology Liberation Front, July 12, 2017.

- Adam Thierer & Jennifer Huddleston Skees, “Emerging Tech Export Controls Run Amok,” Technology Liberation Front, November 28, 2018.

- Adam Thierer, Permissionless Innovation: The Continuing Case for Comprehensive Technological Freedom

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
