Articles by Adam Thierer

Senior Fellow in Technology & Innovation at the R Street Institute in Washington, DC. Formerly a senior research fellow at the Mercatus Center at George Mason University, President of the Progress & Freedom Foundation, Director of Telecommunications Studies at the Cato Institute, and a Fellow in Economic Policy at the Heritage Foundation.

It was my pleasure today to debate the future of public media funding on Warren Olney’s NPR program, “To The Point.” I was one of five guests and wasn’t brought into the show until about 29 minutes into the program, but I tried to reiterate some of the key points I made in my essay last week on “‘Non-Commercial Media’ = Fine; ‘Public Media’ = Not So Much.” I won’t repeat everything I said before since you can just go back and read it, but to briefly summarize what I said there as well as on today’s show: (1) taxpayers shouldn’t be forced to subsidize speech or media content they find potentially objectionable; and (2) public broadcasters are currently perfectly positioned to turn this federal funding “crisis” into a golden opportunity by asking their well-heeled and highly diversified base of supporters to step up to the plate and fill the gap left by the end of taxpayer subsidies.

Just a word more on that last point. As I pointed out on the show today, it’s an uncomfortable fact of life for NPR that their average listener is old, rich, highly-educated, and mostly white. Specifically, here are some numbers that NPR itself has compiled about its audience demographics:

- The median age of the NPR listener is 50.

- The median household income of an NPR News listener is about $86,000, compared to the national average of about $55,000.

- NPR’s audience is extraordinarily well-educated. Nearly 65% of all listeners have a bachelor’s degree, compared to only a quarter of the U.S. population. Also, they are three times more likely than the average American to have completed graduate school.

- The majority of the NPR audience (86%) identifies itself as white.

Why do these numbers matter? Simply stated: These people can certainly step up to the plate and pay more to cover the estimated $1.39 that taxpayers currently contribute to the public media in the U.S. But wait, there’s more! Continue reading →

The folks at Reason magazine were kind enough to invite me to submit a review of Tim Wu’s new book, The Master Switch: The Rise and Fall of Information Empires based on my 6-part series on the book that I posted here on the TLF late last year. (Parts 1, 2, 3, 4, 5, 6) My new essay, which is entitled “The Rise of Cybercollectivism,” has now been posted on the Reason website.

I realize that title will give some readers heartburn, even those who are inclined to agree with me much of the time. After all, “collectivism” is a term that packs some rhetorical punch and leads to quick accusations of red-baiting. I addressed that concern in a Cato Unbound debate with Lawrence Lessig a couple of years ago after he strenuously objected to my use of that term to describe his worldview (and that of Tim Wu, Jonathan Zittrain, and their many colleagues and followers). As I noted then, however, the “collectivism” of which I speak is a more generic type, not the hard-edged Marxist brand of collectivism of modern times. For example, I do not believe that Professors Lessig, Zittrain, or Wu are out to socialize all the information means of production and send us all to digital gulags or anything silly like that. Rather, their “collectivism” is rooted in a more general desire to have–as Declan McCullagh eloquently stated in a critique of Lessig’s Code–rule by “technocratic philosopher kings.” Here’s a passage from my Reason review of Wu’s Master Switch in which I expand upon that notion:

Continue reading →

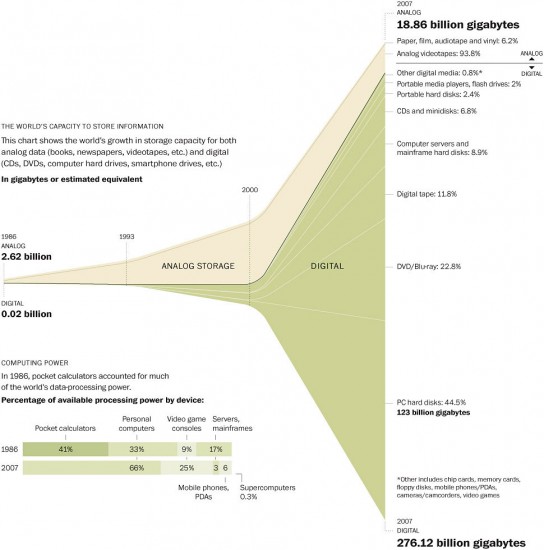

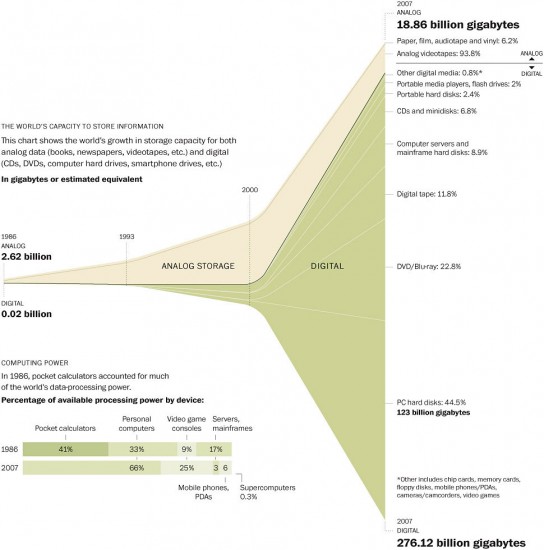

Here’s an amazing graphic that appeared in today’s Washington Post depicting how digital information has exploded over the past two decades. It’s better viewed on a large monitor from this link on the Post website. And here’s the accompanying Post article. The underlying data come from a new study by Martin Hilbert and Priscila Lopez of the University of Southern California, which is entitled, “The World’s Technological Capacity to Store, Communicate, and Compute Information.” It appears in the latest issue of Science but is not available without a subscription.

There’s a sharp piece in today’s Washington Post from Jack Goldsmith, currently with Harvard Law but formerly an assistant attorney general in the Bush administration, about “Why the U.S. Shouldn’t Try Julian Assange.” Goldsmith points to the sticky First Amendment / press freedom issues at stake should the U.S. try to go after Assange and WikiLeaks:

A conviction would also cause collateral damage to American media freedoms. It is difficult to distinguish Assange or WikiLeaks from The Washington Post. National security reporters for The Post solicit and receive classified information regularly. And The Post regularly publishes it. The Obama administration has suggested it can prosecute Assange without impinging on press freedoms by charging him not with publishing classified information but with conspiring with Bradley Manning, the alleged government leaker, to steal and share the information. News reports suggest that this theory is falling apart because the government cannot find evidence that Assange induced Bradley to leak. Even if it could, such evidence would not distinguish the many American journalists who actively aid leakers of classified information.

One reason journalists have never been prosecuted for soliciting and publishing classified information is that the First Amendment, to an uncertain degree never settled by courts, protects these activities. Convicting Assange would require courts to resolve this uncertainty in a way that narrows First Amendment protections. It would imply that the First Amendment does not prevent prosecution of American journalists who seek and publish classified information. At the very least it would render the First Amendment a less certain shield. This would – in contrast to WikiLeaks copycats outside our borders – chill the American press in its national security reporting.

Quite right, and it’s a point bolstered by another editorial that also appeared in the Post a few weeks ago by Adam Penenberg of New York University, in which he made the case for treating Assange as a journalist. Penenberg asks: “What constitutes ‘legitimate newsgathering activities’? How do you differentiate between what WikiLeaks does and what the New York Times does?”

Continue reading →

Congrats are due to Tim Wu, who’s just been appointed as a senior advisor to the Federal Trade Commission (FTC). Tim is a brilliant and gracious guy; easily one of the most agreeable people I’ve ever had the pleasure of interacting with in my 20 years of covering technology policy. He’s a logical choice for such a position in a Democratic administration since he has been one of the leading lights on the Left on cyberlaw issues over the past decade.

That being said, Tim’s ideas on tech policy trouble me deeply. I’ll ignore the fact that he gave birth to the term “net neutrality” and that he chaired the radical regulatory activist group, Free Press. Instead, I just want to remind folks of one very troubling recommendation for the information sector that he articulated in his new book, The Master Switch: The Rise and Fall of Information Empires. While his book was preoccupied with corporate power and the ability of media and communications companies to possess a supposed “master switch” over speech or culture, I’m more worried about the “regulatory switch” that Tim has said the government should toss.

Tim has suggested that a so-called “Separations Principle” govern our modern information economy. “A Separations Principle would mean the creation of a salutary distance between each of the major functions or layers in the information economy,” he says. “It would mean that those who develop information, those who control the network infrastructure on which it travels, and those who control the tools or venues of access must be kept apart from one another.” Tim calls this a “constitutional approach” because he models it on the separation of powers found in the U.S. Constitution.

I critiqued this concept in Part 6 of my ridiculously long multi-part review of his new book, and I discuss it further in a new Reason magazine article, which is due out shortly. As I note in my Reason essay, Tim’s blueprint for “reforming” technology policy represents an audacious industrial policy for the Internet and America’s information sectors. Continue reading →

I’m not one of those libertarians who incessantly rants about the supposed evils of National Public Radio (NPR) and the Public Broadcasting Service (PBS). In fact, I find quite a bit to like in the programming I consume on both services, NPR in particular. A few years back I realized that I was listening to about 45 minutes to an hour of programming on my local NPR affiliate (WAMU) each morning and afternoon, and so I decided to donate $10 per month. Doesn’t sound like much, but at 120 bucks per year, that’s more than I spend on any other single news media product with the exception of The Wall Street Journal. So, when there’s value in a media product, I’ll pay for it, and I find great value in NPR’s “long-form” broadcast journalism, despite its occasional political slant on some issues.

In many ways, the Corporation for Public Broadcasting, which supports NPR and PBS, has the perfect business model for the age of information abundance. Philanthropic models (which rely on support from foundational benefactors, corporate underwriters, individual donors, and even government subsidy) can help diversify the funding base at a time when traditional media business models (advertising support, subscriptions, and direct sales) are being strained. This is why many private media operations are struggling today; they’re experiencing the ravages of gut-wrenching marketplace / technological changes and searching for new business models to sustain their operations. By contrast, CPB, NPR, and PBS are better positioned to weather this storm since they do not rely on those same commercial models.

Nonetheless, NPR and PBS and the supporters of increased “public media” continue to claim that they are in peril and that increased support, especially public subsidy, is essential to their survival. For example, consider an editorial in today’s Washington Post making “The Argument for Funding Public Media,” which was penned by Laura R. Walker, the president and chief executive of New York Public Radio, and Jaclyn Sallee, the president and chief executive officer of Koahnic Broadcast Corp. in Anchorage. They argue: Continue reading →

I absolutely loved this quote about the dangers of regulatory capture from Holman Jenkins in today’s Wall Street Journal in a story (“Let’s Restart the Green Revolution”) about how misguided agricultural / environmental policies are hurting consumers:

When some hear the word “regulation,” they imagine government rushing to the defense of consumers. In the real world, government serves up regulation to those who ask for it, which usually means organized interests seeking to block a competitive threat. This insight, by the way, originated with the left, with historians who went back and reconstructed how railroads in the U.S. concocted federal regulation to protect themselves from price competition. We should also notice that an astonishingly large part of the world has experienced an astonishing degree of stagnation for an astonishingly long time for exactly such reasons.

I’ve just added it to my growing compendium of notable quotations about regulatory capture. It’s essential that we not ignore how — despite the very best of intentions — regulation often has unintended and profoundly anti-consumer / anti-innovation consequences.

This is the second of two essays making “The Case for Internet Optimism.” This essay was included in the book, The Next Digital Decade: Essays on the Future of the Internet (2011), which was edited by Berin Szoka and Adam Marcus of TechFreedom. In my previous essay, which I discussed here yesterday, I examined the first variant of Internet pessimism: “Net Skeptics,” who are pessimistic about the Internet improving the lot of mankind. In this second essay, I take on a very different breed of Net pessimists: “Net Lovers” who, though they embrace the Net and digital technologies, argue that they are “dying” due to a lack of sufficient care or collective oversight. In particular, they fear that the “open” Internet and “generative” digital systems are giving way to closed, proprietary systems, typically run by villainous corporations out to erect walled gardens and quash our digital liberties. Thus, they are pessimistic about the long-term survival of the Internet that we currently know and love.

Leading exponents of this theory include noted cyberlaw scholars Lawrence Lessig, Jonathan Zittrain, and Tim Wu. I argue that these scholars tend to significantly overstate the severity of this problem (the supposed decline of openness or generativity, that is) and seem to have very little faith in the ability of such systems to win out in a free market. Moreover, there’s nothing wrong with a hybrid world in which some “closed” devices and platforms remain (or even thrive) alongside “open” ones. Importantly, “openness” is a highly subjective term, and a constantly evolving one. And many “open” systems or devices aren’t as perfectly open as these advocates suggest.

Finally, I argue that it’s more likely that the “openness” advocated by these advocates will devolve into expanded government control of cyberspace and digital systems than that unregulated systems will become subject to “perfect control” by the private sector, as they fear. Indeed, the implicit message in the work of all these hyper-pessimistic critics is that markets must be steered in a more sensible direction by those technocratic philosopher kings (although the details of their blueprint for digital salvation are often scarce). Thus, I conclude that the dour, depressing “the-Net-is-about-to-die” fear that seems to fuel this worldview is almost completely unfounded and should be rejected before serious damage is done to the evolutionary Internet through misguided government action.

I’ve embedded the entire essay down below in Scribd reader, but it can also be found on TechFreedom’s Next Digital Decade book website and SSRN.

Continue reading →

Here’s the first of two essays I’ve recently penned making “The Case for Internet Optimism.” This essay was included in the book, The Next Digital Decade: Essays on the Future of the Internet (2011), which was edited by Berin Szoka and Adam Marcus of TechFreedom. In these essays, I identify two schools of Internet pessimism: (1) “Net Skeptics,” who are pessimistic about the Internet improving the lot of mankind; and (2) “Net Lovers,” who appreciate the benefits the Net brings society but who fear those benefits are disappearing, or that the Net or openness are dying. (Regular readers of this blog will be familiar with these themes since I sketched them out in previous essays here such as, “Are You an Internet Optimist or Pessimist?” and “Two Schools of Internet Pessimism.”) The second essay is here.

This essay focuses on the first variant of Internet pessimism, which is rooted in general skepticism about the supposed benefits of cyberspace, digital technologies, and information abundance. The proponents of this pessimistic view often wax nostalgic about some supposed “good ol’ days” when life was much better (although they can’t seem to agree when those were). At a minimum, they want us to slow down and think twice about life in the Information Age and how it’s personally affecting each of us. Occasionally, however, this pessimism borders on neo-Ludditism, with some proponents recommending steps to curtail what they feel is the destructive impact of the Net or digital technologies on culture or the economy. I identify the leading exponents of this view of Internet pessimism and their major works. I trace their technological pessimism back to Plato but argue that their pessimism is largely unwarranted. Humans are more resilient than pessimists care to admit and we learn how to adapt to technological change and assimilate new tools into our lives over time. Moreover, were we really better off in the scarcity era when we were collectively suffering from information poverty? Generally speaking, despite the challenges it presents society, information abundance is a better dilemma to be facing than information poverty. Nonetheless, I argue, we should not underestimate or belittle the disruptive impacts associated with the Information Revolution. But we need to find ways to better cope with turbulent change in a dynamist fashion instead of attempting to roll back the clock on progress or recapture “the good ol’ days,” which actually weren’t all that good.

Down below, I have embedded the entire chapter in a Scribd reader, but the essay can also be found on the TechFreedom website for the book as well as on SSRN. I have also included two updated tables that appeared in my old “optimists vs. pessimists” essay. The first lists some of the leading Internet optimists and pessimists and their books. The second table outlines some of the major lines of disagreement between these two camps, which I have divided into (1) Cultural / Social beliefs vs. (2) Economic / Business beliefs.

Continue reading →

A headline in the USA Today earlier this week screamed, “Hello, Big Brother: Digital Sensors Are Watching Us.” It opens with an all too typical techno-panic tone, replete with tales of impending doom:

Odds are you will be monitored today — many times over. Surveillance cameras at airports, subways, banks and other public venues are not the only devices tracking you. Inexpensive, ever-watchful digital sensors are now ubiquitous.

They are in laptop webcams, video-game motion sensors, smartphone cameras, utility meters, passports and employee ID cards. Step out your front door and you could be captured in a high-resolution photograph taken from the air or street by Google or Microsoft, as they update their respective mapping services. Drive down a city thoroughfare, cross a toll bridge, or park at certain shopping malls and your license plate will be recorded and time-stamped.

Several developments have converged to push the monitoring of human activity far beyond what George Orwell imagined. Low-cost digital cameras, motion sensors and biometric readers are proliferating just as the cost of storing digital data is decreasing. The result: the explosion of sensor data collection and storage.

Oh my God! Dust off your copies of the Unabomber Manifesto and run for your shack in the hills!

No, wait, don’t. Let’s instead step back, take a deep breath and think about this. As the article goes on to note, there will certainly be many benefits to our increasing “sensor society.” Advertising and retail activity will become more personalized and offer consumers more customized goods and services. I wrote about that here at greater length in my essay on “Smart-Sign Technology: Retail Marketing Gets Sophisticated, But Will Regulation Kill It First?” More importantly, ubiquitous digital sensors and data collection/storage will also increase our knowledge of the world around us exponentially and do wonders for scientific, environmental, and medical research.

But that won’t soothe those who fear the loss of their privacy and the rise of a surveillance society in which our every move is watched or tracked. So, let’s talk about what those of you who feel that way want to do about it.

Continue reading →

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
