This article originally appeared at techfreedom.org.

Twenty years ago today, President Clinton signed the Telecommunications Act of 1996. John Podesta, his chief of staff, immediately saw the problem: “Aside from hooking up schools and libraries, and with the rather major exception of censorship, Congress simply legislated as if the Net were not there.”

Here’s our take on what Congress got right (some key things), what it got wrong (most things), and what an update to the key laws that regulate the Internet should look like. The short version is:

- End FCC censorship of “indecency”

- Focus on promoting competition

- Focus regulation on consumers rather than arbitrary technological silos or political whim

- Get the FCC out of the business of helping government surveillance

Trying, and Failing, to Censor the Net

Good: The Act is most famous for Section 230, which made Facebook and Twitter possible. Without 230, such platforms would have been held liable for the speech of their users — just as newspapers are liable for letters to the editor. Trying to screen user content would simply have been impossible. Sharing user-generated content (UGC) on sites like YouTube and social networks would’ve been tightly controlled or simply might never have taken off. Without Section 230, we might all still be locked in to AOL!

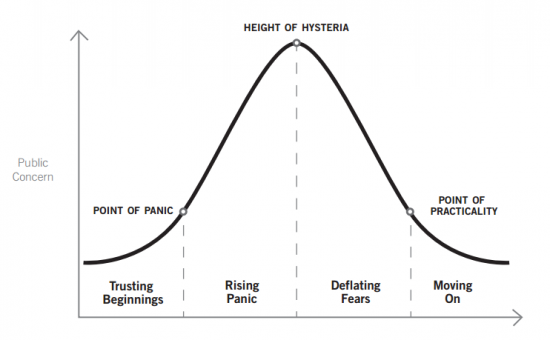

Bad: Still, the Act was very much driven by a technopanic over “protecting the children.”

- Internet Censorship. 230 was married to a draconian crackdown on Internet indecency. Aimed at keeping pornography away from minors, the rest of the Communications Decency Act — rolled into the Telecom Act — would have required age verification of all users, not just on porn sites, but probably any UGC site, too. Fortunately, the Supreme Court struck this down as a ban on anonymous speech online.

- Broadcast Censorship. Unfortunately, the FCC is still in the censorship business for traditional broadcasting. The 1996 Act did nothing to check the agency’s broad powers to decide how long a glimpse of a butt or a nipple is too much for Americans’ sensitive eyes.

Unleashing Competition—Slowly

Good: Congress unleashed over $1.3 trillion in private broadband investment, pitting telephone companies and cable companies against each other in a race to serve consumers with voice, video and broadband service.

- Legalizing Telco Video. In 1984, Congress had (mostly) prohibited telcos from providing video service — largely on the assumption that it was a monopoly. Congress reversed that, which eventually meant telcos had the incentive to invest in networks that could carry video — and super-fast broadband.

- Breaking Local Monopolies. Congress also barred localities from blocking new entry by denying a video “franchise.”

- Encouraging Cable Investment. The 1992 Cable Act had briefly imposed price regulation on basic cable packages. This proved so disastrous that the Democratic FCC retreated — but only after killing a cycle of investment and upgrades, delaying cable modem service by years. In 1996, Congress finally put a stake through the heart of such rate regulation, removing investment-killing uncertainty.

Bad: While the Act laid the foundations for what became facilities-based network competition, its immediate focus was pathetically short-sighted: trying to engineer artificial competition for telephone service.

- Unbundling Mandates. The Act created an elaborate set of requirements that telephone companies “unbundle” parts of their networks so that resellers could use them, at sweetheart prices, to provide “competitive” service. The FCC then spent the next nine years fighting over how to set these rates.

- Failure of Vision. Meanwhile, competing networks provided fierce competition: cable providers gained over half the telephony market with a VoIP service, and 47% of customers have simply cut the cord — switching entirely to wireless. Though the FCC refuses to recognize it, broadband is becoming more competitive, too: 2014 saw telcos invest in massive upgrades, bringing 25–75 Mbps speeds to more than half the country by pushing fiber closer to homes. The cable-telco horse race is fiercer than ever — and Google Fiber has expanded its deployment of a third pipe to the home, while cable companies are upgrading to provide gigabit-plus speeds and wireless broadband has become a real alternative for rural America.

- Delaying Fiber. The greatest cost of the FCC’s unbundling shenanigans was delaying the major investments telcos needed to keep up with cable. Not until 2003 did the FCC make clear that it would not impose unbundling mandates on fiber — which pushed Verizon to begin planning its FiOS fiber-to-the-home network. The other crucial step came in 2006, when the Commission finally clamped down on localities that demanded lavish ransoms for allowing the deployment of new networks, which stifled competition.

Regulation

Good: With the notable exception of unbundling mandates, the Act was broadly deregulatory.

- General thrust. Congress could hardly have been clearer: “It is the policy of the United States… to preserve the vibrant and competitive free market that presently exists for the Internet and other interactive computer services, unfettered by Federal or State regulation.”

- Ongoing Review & Deregulation. Congress gave the FCC broad discretion to ratchet down regulation to promote competition.

Bad: The Clinton Administration realized that technological change was rapidly erasing the lines separating different markets, and had proposed a more technology-neutral approach in 1993. But Congress rejected that approach. The Act continued to regulate by dividing technologies into silos: broadcasting (Title III), telephone (Title II) and cable (Title VI). Title I became a catch-all for everything else. Crucially, Congress didn’t draw a clear line between Title I and Title II, setting in motion a high-stakes fight that continues today.

- Away from Regulatory Silos. Bill Kennard, Clinton’s FCC Chairman, quickly saw just how obsolete the Act was. His 1999 Strategic Plan remains a roadmap for FCC reform.

- Away from Title II. Kennard also indicated that he favored placing all broadband in Title I — mainly because he understood that Title II was designed for a monopoly and would tend to perpetuate it. Vibrant competition between telcos and cable companies could happen only under Title I. But it was the Bush FCC that made this official, classifying cable modem as Title I in 2002 and telco DSL in 2005.

- Net Neutrality Confusion. The FCC spent a decade trying to figure out how to regulate net neutrality, losing in court twice, distracting the agency from higher priorities — like promoting broadband deployment and adoption — and making telecom policy, once an area of non-partisan pragmatism, a fiercely partisan ideological cesspool.

- Back to Title II. In 2015, the FCC reclassified broadband under Title II — not because it didn’t have other legal options for regulating net neutrality, but because President Obama said it should. He made the issue part of his re-assertion of authority after Democrats lost the 2014 midterm elections. Net neutrality and Title II became synonymous, even though they have little to do with each other. Now, the FCC’s back in court for the third time.

- Inventing a New Act. Unless the courts stop it, the FCC will exploit the ambiguities of the ‘96 Act to essentially write a new Act out of thin air: regulating way up with Title II, using its forbearance powers to temporarily suspend politically toxic parts of the Act (like unbundling), and inventing wholly new rules that give the FCC maximum discretion—while claiming the power to do anything that somehow promotes broadband. The FCC calls this all “modernization” but it’s really a staggering power grab that allows the FCC to control the Internet in the murkiest way possible.

- Bottom line: The 1996 Act gives the FCC broad authority to regulate in the “public interest,” without effectively requiring the FCC to gauge the competitive effects of what it does. The agency’s stuck in a kind of Groundhog Day of over-regulation, constantly over-doing it without ever learning from its mistakes.

Time for a #CommActUpdate

Censorship. The FCC continues to censor dirty words and even brief glimpses of skin on television because of a 1978 decision that assumes parents are helpless to control their kids’ media consumption. Today, parental control tools make this assumption obsolete: parents can easily block programming marked as inappropriate. Congress should require the FCC to focus on outright obscenity — and let parents choose for themselves.

Competition. If the 1996 Act served to allow two competing networks, a rewrite should focus on driving even fiercer cable-telco competition, encouraging Google Fiber and others to build a third pipe to the home, and making wireless an even stronger competitor.

- Title II. If you wanted to protect cable companies from competition, you couldn’t find a better way to do it than Title II. Closing that Pandora’s Box forever will encourage companies like Google Fiber to enter the market. But Congress needs to finish what the 1996 Act started: it’s not enough to stop localities from denying franchises for video service (and thus broadband, too).

- Local Barriers. Congress should crack down on the moronic local practices that have made deployment of new networks prohibitive, learning from the success of Google Fiber cities, which have cut red tape, lowered fees and generally gotten out of the way. Pending bipartisan legislation would make these changes for federal assets, and require federal highway projects to include Dig Once conduits to make fiber deployment easier. That’s particularly helpful for rural areas, which the FCC has ignored, but making deployment easier inside cities will require making municipal rights of way easier to use. Instead of rushing to build their own broadband networks, localities should have to first at least try to stimulate private deployment.

Regulation. Technological silos made little sense in 1993. Today, they’re completely obsolete.

- Unchecked Discretion. The FCC’s right about one thing: rigid rules don’t make sense either, given how fast technology is changing. But giving the FCC sweeping discretion is even more dangerous: it makes regulating the Internet inherently political, subject to presidential whim and highly sensitive to elections.

- The Fix. There’s a simple solution: write clear standards that let the FCC work across all communications technologies, but that require the FCC to prove that its tinkering actually makes consumers better off. As long as the FCC can do whatever it claims is in the “public interest,” the Internet will never be safe.

- Rethinking the FCC. Indeed, Congress should seriously consider breaking up the FCC, transferring its consumer protection functions to the Federal Trade Commission and its spectrum functions to the Commerce Department.

Encryption. Since 1994, the FCC has had the power to require “telecommunications services” to be wiretap-ready — and the discretion to decide how to interpret that term. Today, the FBI is pushing for a ban on end-to-end encryption — so law enforcement can get backdoor access into services like Snapchat. Unfortunately, foreign governments and malicious hackers could use those backdoors, too. Congress is stalling, but the FCC could give law enforcement exactly what it wants — using the same legal arguments it used to reclassify mobile broadband under Title II. Law enforcement is probably already using this possibility to pressure Internet companies against adopting secure encryption. Congress should stop the FCC from requiring back doors.

As the Industrial Revolution revealed, leaps in economic and human growth cannot be planned. They arise from societies that reward risk takers and legal systems that accommodate change. Our ability to achieve progress is directly proportional to our willingness to embrace and benefit from technological innovation, and it is a direct result of getting public policies right.


The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
