My thanks to both Maria H. Andersen and Michael Sacasas for their thoughtful responses to my recent Forbes essay on “10 Things Our Kids Will Never Worry About Thanks to the Information Revolution.” They both go point by point through my Top 10 list and offer an alternative way of looking at each of the trends I identify. What their responses share is a general unease with the hyper-optimism of my Forbes piece. That’s understandable. In my work on technological “optimism” and “pessimism” (and yes, I admit those labels are overly simplistic), I typically try to strike a sensible balance between pollyannism and hyper-pessimism as it pertains to the impact of technological change on our culture and economy. I have called this middle-ground position “pragmatic optimism.” In my Forbes essay, however, I was in full-blown pollyanna mode. That doesn’t mean I don’t generally feel very positive about the changes I itemized in that essay; rather, I just didn’t have the space in a 1,000-word column to identify the tradeoffs inherent in each trend. Thus, Andersen and Sacasas are rightfully pushing back against my lack of balance.

But there is a problem with their slightly pessimistic pushback, too. To better explain my own position and respond to Andersen and Sacasas, let me return to the story we hear again and again in discussions about technological change: the well-known allegorical tale from Plato’s Phaedrus about the dangers of the written word. In the tale, the god Theuth comes to King Thamus and boasts of how his invention of writing would improve the wisdom and memory of the masses relative to the oral tradition of learning. King Thamus shoots back, “the discoverer of an art is not the best judge of the good or harm which will accrue to those who practice it.” King Thamus then passes judgment himself on the impact of writing on society, saying he fears that the people “will receive a quantity of information without proper instruction, and in consequence be thought very knowledgeable when they are for the most part quite ignorant.”

After recounting Plato’s allegory in my essay, “Are You An Internet Optimist or Pessimist? The Great Debate over Technology’s Impact on Society,” I noted how this same tension has played out in every subsequent debate about the impact of a new technology on culture, values, morals, language, learning, and so on. It is a never-ending cycle.

In my ongoing work on technopanics, I’ve frequently noted how special interests create phantom fears and use “threat inflation” in an attempt to win attention and public contracts. In my next book, I have an entire chapter devoted to explaining how “fear sells” and I note how often companies and organizations incite fear to advance their own ends. Cybersecurity and child safety debates are littered with examples.

In their recent paper, “Loving the Cyber Bomb? The Dangers of Threat Inflation in Cybersecurity Policy,” my Mercatus Center colleagues Jerry Brito and Tate Watkins argued that “a cyber-industrial complex is emerging, much like the military-industrial complex of the Cold War.” As Stefan Savage, a Professor in the Department of Computer Science and Engineering at the University of California, San Diego, told The Economist magazine, the cybersecurity industry sometimes plays “fast and loose” with the numbers because it has an interest in “telling people that the sky is falling.” In a similar vein, many child safety advocacy organizations use technopanics to pressure policymakers to fund initiatives they create. [Sometimes I can get a bit snarky about this.]

[NOTE: The following is a template for how to script congressional testimony when invited to speak about online safety issues.]

Mr. Chairman and members of the Committee, thank you for inviting me here today to testify about the most important issue to me and everyone in this room: Our children.

There is nothing I care more about than the future of our children. Like Whitney Houston, “I believe the children are our future.”

Mr. Chairman, I remember with fondness the day my little Johnny and Jannie came into this world. They were my little miracles. Gifts from God, I say. At the moment of birth, my wife… oh, well, I could tell you all about it someday but suffice it to say it was a beautiful scene, with the exception of all the amniotic fluid and blood everywhere. I wept for days.

Today my kids are (mention ages of each) and they are the cutest little angels on God’s green Earth. (NOTE: At this point it would be useful for you to hold up a picture of your kids, preferably with them cuddling with cute stuffed animals, a kitten, or petting a pony as in the example below. Alternatively, use a picture taken at a major attraction located in the Chairman’s congressional district.)

Mark Thompson has a new essay up over at Time on “Cyber War Worrywarts” in which he argues that in debates about cybersecurity, “the ratio of scaremongers to calm logic [is] currently about a 2-to-1 edge in favor of the Jules Verne crowd.” He’s right. In fact, I used my latest Forbes essay to document some of the panicky rhetoric and examples of “threat inflation” we currently see at work in debates over cybersecurity policy. “Threat inflation” refers to the artificial escalation of dangers or harms to society or the economy and doom-and-gloom rhetoric is certainly on the rise in this arena.

I begin my essay by noting how “It has become virtually impossible to read an article about cybersecurity policy, or sit through any congressional hearing on the issue, without hearing prophecies of doom about an impending ‘Digital Pearl Harbor,’ a ‘cyber Katrina,’ or even a ‘cyber 9/11.’” Meanwhile, Gen. Michael Hayden, who led the National Security Agency and Central Intelligence Agency under President George W. Bush, recently argued that a “digital Blackwater” may be needed to combat the threat of cyberterrorism.

These rhetorical claims are troubling to me for several reasons. I build on the concerns raised originally in an important Mercatus Center paper by my colleagues Jerry Brito and Tate Watkins, which warns of the dangers of threat inflation in policy debates and the corresponding rise of the “cybersecurity industrial complex.” In my Forbes essay, I note that:

I enjoyed this Wall Street Journal essay by Daniel H. Wilson on “The Terrifying Truth About New Technology.” It touches on many of the themes I’ve discussed here in my essays on techno-panics, fears about information overload, and the broader battle throughout history between technology optimists and pessimists regarding the impact of new technologies on culture, life, and learning. Wilson correctly notes that:

The fear of the never-ending onslaught of gizmos and gadgets is nothing new. The radio, the telephone, Facebook — each of these inventions changed the world. Each of them scared the heck out of an older generation. And each of them was invented by people who were in their 20s.

He continues:

Young people adapt quickly to the most absurd things. Consider the social network Foursquare, in which people not only willingly broadcast their location to the world but earn goofy virtual badges for doing so. My first impulse was to ignore Foursquare—for the rest of my life, if I have to.

And that’s the problem. As we get older, the process of adaptation slows way down. Unfortunately, we depend on alternating waves of assimilation and accommodation to adapt to a constantly changing world. For [developmental psychologist Jean] Piaget, this balance between what’s in the mind and what’s in the environment is called equilibrium. It’s pretty obvious when equilibrium breaks down. For example, my grandmother has phone numbers taped to her cellphone. Having grown up with the Rolodex (a collection of numbers stored next to the phone), she doesn’t quite grasp the concept of putting the numbers in the phone.

Why are we so nostalgic about the technology we grew up with? Old people say things like: “This new technology is stupid. I liked (new, digital) technology X better when it was called (old, analog) technology Y. Why, back in my day….” Which leads inexorably to, “I just don’t get it.”

There’s a simple explanation for this phenomenon: “adventure window.” At a certain age, that which is familiar and feels safe becomes more important to you than that which is new, different, and exciting. Think of it as “set-in-your-ways syndrome.”

Facebook announced yesterday that it had finished most of the global roll-out of its automatic photo tag suggestions, begun in the U.S. last December. Now ZDNet reports that European privacy regulators are already planning a probe of the feature. Emil Protalinski writes:

“Tags of people on pictures should only happen based on people’s prior consent and it can’t be activated by default,” Gerard Lommel, a Luxembourg member of the so-called Article 29 Data Protection Working Party, told BusinessWeek. Such automatic tagging “can bear a lot of risks for users” and the group of European data protection officials will “clarify to Facebook that this can’t happen like this.”

No doubt our friends at the Extra-Paternalist Internet Cops (EPIC) will jump into the fray with another of their many complaints to the FTC, dripping with outrage that Facebook has “opted us into” this feature. But what’s the big deal, really? Emil explains how things work:

When you upload new photos, Facebook uses software similar to that found in many photo editing tools to match your new photos to other photos you’re tagged in. Similar photos are grouped together and, whenever possible, Facebook suggests the name(s) your friend(s) in the photos. In other words, the square that magically finds faces in a photo now suggests names of your Facebook friends to streamline the tagging process, especially with the same friends in multiple uploaded photos.

Lifehacker explains how easy it is for Facebook users to opt out of having their friends see the automatically generated suggestion to tag their face (as Facebook did in its own announcement):

- Head to your Privacy Settings and click on Customize Settings.

- Scroll down to the “Suggest Photos of Me to Friends” setting and hit “Edit Settings”.

- In the drop-down on the right, hit “Disable”.

See the screenshots here. So, in short: The feature that’s upsetting the privacy regulationistas is a feature that saves us time and effort in tagging our friends in photos we upload, unless our friends have opted out of having their photos auto-suggested.

John Naughton, a professor at the Open University in the U.K. and a columnist for the U.K. Guardian, has a new essay out entitled “Only a Fool or Nicolas Sarkozy Would Go to War with Facebook.” I enjoyed it because it touches upon two interrelated concepts that I’ve spent years writing about: “moral panic” and the “third-person effect hypothesis” (although Naughton doesn’t discuss the latter by name in his piece). To recap, let’s define those terms:

“Moral Panic” / “Techno-Panic”: Christopher Ferguson, a professor at Texas A&M’s Department of Behavioral, Applied Sciences and Criminal Justice, offers the following definition: “A moral panic occurs when a segment of society believes that the behavior or moral choices of others within that society poses a significant risk to the society as a whole.” By extension, a “techno-panic” is simply a moral panic that centers around societal fears about a specific contemporary technology (or technological activity) instead of merely the content flowing over that technology or medium.

“Third-Person Effect Hypothesis”: First formulated by psychologist W. Phillips Davison in 1983, “this hypothesis predicts that people will tend to overestimate the influence that mass communications have on the attitudes and behavior of others. More specifically, individuals who are members of an audience that is exposed to a persuasive communication (whether or not this communication is intended to be persuasive) will expect the communication to have a greater effect on others than on themselves.” While originally formulated as an explanation for how people convinced themselves “media bias” existed where none was present, the third-person-effect hypothesis has provided an explanation for other phenomena and forms of regulation, especially content censorship. Indeed, one of the most intriguing aspects of censorship efforts historically is that many censorship advocates desire regulation to protect others, not themselves, from what they perceive to be persuasive or harmful content. That is, many people imagine themselves immune from the supposedly ill effects of “objectionable” material, or even just persuasive communications or viewpoints they do not agree with, but they claim it will have a corrupting influence on others.

All my past essays about moral panics and the third-person effect hypothesis can be found here. These theories are also frequently on display in the work of some of the “Internet pessimists” I have written about here, as well as in many bills and regulatory proposals floated by lawmakers. Which brings us back to the Naughton essay.

I’m currently plugging away at a big working paper with the running title, “Argumentum in Cyber-Terrorem: A Framework for Evaluating Fear Appeals in Internet Policy Debates.” It’s an attempt to bring together a number of issues I’ve discussed here in my past work on “techno-panics” and devise a framework to evaluate and address such panics using tools from various disciplines. I begin with some basic principles of critical argumentation and outline various types of “fear appeals” that usually represent logical fallacies, including: argumentum in terrorem, argumentum ad metum, and argumentum ad baculum. But I’ll post more about that portion of the paper some other day. For now, I wanted to post a section of that paper entitled “The Problem with the Precautionary Principle.” I’m posting what I’ve got done so far in the hopes of getting feedback and suggestions for how to improve it and build it out a bit. Here’s how it begins…

________________

The Problem with the Precautionary Principle

“Isn’t it better to be safe than sorry?” That is the traditional response of those perpetuating techno-panics when their fear appeal arguments are challenged. This response is commonly known as “the precautionary principle.” Although this principle is most often discussed in the field of environmental law, it is increasingly on display in Internet policy debates.

The “precautionary principle” basically holds that since every technology and technological advance poses some theoretical danger or risk, public policy should be crafted in such a way that no possible harm will come from a particular innovation before further progress is permitted. In other words, law should mandate “just play it safe” as the default policy toward technological progress.

[UPDATE Feb. 2012: This little essay eventually led to an 80-page working paper, “Technopanics, Threat Inflation, and the Danger of an Information Technology Precautionary Principle.”]

__________

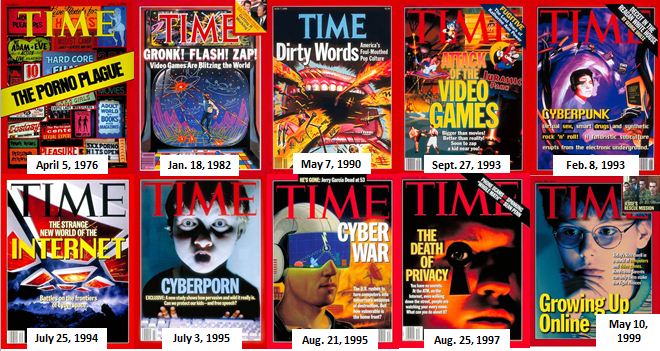

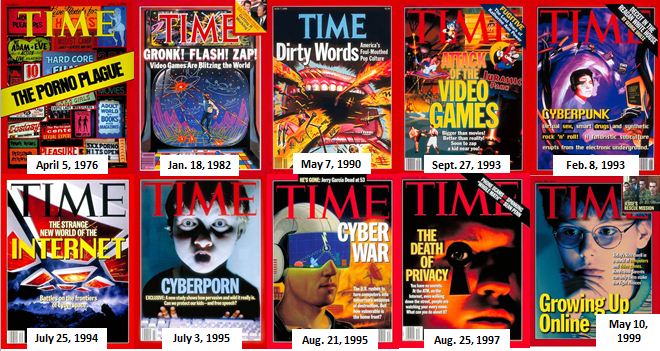

In this essay, I will suggest that (1) while “moral panics” and “techno-panics” are nothing new, their cycles seem to be accelerating as new communications and information networks and platforms proliferate; (2) new panics often “crowd-out” or displace old ones; and (3) the current scare over online privacy and “tracking” is just the latest episode in this ongoing cycle.

What Counts as a “Techno-Panic”?

First, let’s step back and define our terms. Christopher Ferguson, a professor at Texas A&M’s Department of Behavioral, Applied Sciences and Criminal Justice, offers the following definition: “A moral panic occurs when a segment of society believes that the behavior or moral choices of others within that society poses a significant risk to the society as a whole.” By extension, a “techno-panic” is simply a moral panic that centers around societal fears about a specific contemporary technology (or technological activity) instead of merely the content flowing over that technology or medium. In her brilliant 2008 essay on “The MySpace Moral Panic,” Alice Marwick noted:

A headline in USA Today earlier this week screamed, “Hello, Big Brother: Digital Sensors Are Watching Us.” The article opens with an all-too-typical techno-panic tone, replete with tales of impending doom:

Odds are you will be monitored today — many times over. Surveillance cameras at airports, subways, banks and other public venues are not the only devices tracking you. Inexpensive, ever-watchful digital sensors are now ubiquitous.

They are in laptop webcams, video-game motion sensors, smartphone cameras, utility meters, passports and employee ID cards. Step out your front door and you could be captured in a high-resolution photograph taken from the air or street by Google or Microsoft, as they update their respective mapping services. Drive down a city thoroughfare, cross a toll bridge, or park at certain shopping malls and your license plate will be recorded and time-stamped.

Several developments have converged to push the monitoring of human activity far beyond what George Orwell imagined. Low-cost digital cameras, motion sensors and biometric readers are proliferating just as the cost of storing digital data is decreasing. The result: the explosion of sensor data collection and storage.

Oh my God! Dust off your copies of the Unabomber Manifesto and run for your shack in the hills!

No, wait, don’t. Let’s instead step back, take a deep breath and think about this. As the article goes on to note, there will certainly be many benefits to our increasing “sensor society.” Advertising and retail activity will become more personalized and offer consumers more customized goods and services. I wrote about that here at greater length in my essay on “Smart-Sign Technology: Retail Marketing Gets Sophisticated, But Will Regulation Kill It First?” More importantly, ubiquitous digital sensors and data collection/storage will also increase our knowledge of the world around us exponentially and do wonders for scientific, environmental, and medical research.

But that won’t soothe those who fear the loss of their privacy and the rise of a surveillance society in which our every move is watched or tracked. So, let’s talk about what those of you who feel that way want to do about it.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
