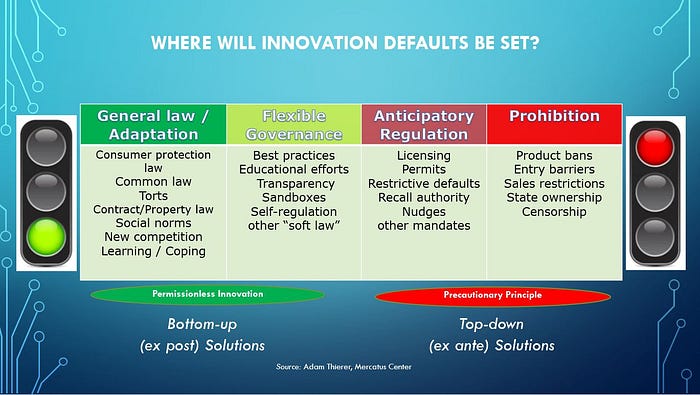

There are two general types of technological governance that can be used to address challenges associated with artificial intelligence (AI) and computational sciences more generally. We can think of these as “on the ground” (bottom-up, informal “soft law”) governance mechanisms versus “on the books” (top-down, formal “hard law”) governance mechanisms.

Unfortunately, heated debates about the latter type of governance often divert attention from the many ways in which the former can (or already does) help us address many of the challenges associated with emerging technologies like AI, machine learning, and robotics. It is important that we think harder about how to optimize these decentralized soft law governance mechanisms today, especially as traditional hard law methods are increasingly strained by the relentless pace of technological change and ongoing dysfunctionalism in the legislative and regulatory arenas.

On the Ground vs. On the Books Governance

Let’s unpack these “on the ground” and “on the books” notions a bit more. I am borrowing these descriptors from an important 2011 law review article by Kenneth A. Bamberger and Deirdre K. Mulligan, which explored the distinction between what they referred to as “Privacy on the Books and on the Ground.” They identified how privacy best practices were emerging in a decentralized fashion thanks to the activities of corporate privacy officers and privacy associations who helped formulate best practices for data collection and use.

The growth of privacy professional bodies and non-profit organizations, especially the International Association of Privacy Professionals (IAPP), helped better formalize privacy best practices by establishing and certifying internal champions to uphold key data-handling principles within organizations. By 2019, the IAPP had over 50,000 trained members globally, and its numbers keep swelling. Today, it is quite common to find Chief Privacy Officers throughout the corporate, governmental, and non-profit world.

These privacy professionals work together and in conjunction with a wide diversity of other players to “bake in” widely accepted information collection and use practices within all these organizations. With the help of IAPP and other privacy advocates and academics, these professionals also look to constantly refine and improve their standards to account for changing circumstances and challenges in our fast-paced data economy. They also look to ensure that organizations live up to commitments they have made to the public or even governments to abide by various data-handling best practices.

Soft Law vs. Hard Law

These “on the ground” efforts have helped usher in a variety of corporate social responsibility best practices and provide a flexible governance model that can be a complement to, or sometimes even a substitute for, formal “on the books” efforts. We can also think of this as the difference between soft law and hard law.

Soft law refers to agile, adaptable governance schemes for emerging technology that create substantive expectations and best practices for innovators without regulatory mandates. Soft law can take many forms, including guidelines, best practices, agency consultations & workshops, multistakeholder initiatives, and other experimental types of decentralized, non-binding commitments and efforts.

Soft law has become a bit of a gap-filler in the U.S. as hard law efforts fail for various reasons. The most obvious explanation for why the role of hard law governance has shrunk is that it’s just very hard for law to keep up with fast-moving technological developments today. This is known as the pacing problem. Many scholars have identified how the pacing problem gives rise to a “governance gap” or “competency trap” for policymakers because, just as quickly as they are coming to grips with new technological developments, other technologies are emerging quickly on their heels.

Think of modern technologies — especially informational and computational technologies — like a series of waves that come flowing in to shore faster and faster. As soon as one wave crests and then crashes down, another one comes right after it and soaks you again before you’ve had time to recover from the daze of the previous ones hitting you. In a world of combinatorial innovation, in which technologies build on top of one another in a symbiotic fashion, this process becomes self-reinforcing and relentless. For policymakers, this means that just when they’ve worked their way up one technological learning curve, the next wave hits and forces them to try to quickly learn about and prepare for the next one that has arrived. Lawmakers are often overwhelmed by this flood of technological change, making it harder and harder for policies to get put in place in a timely fashion — and equally hard to ensure that any new or even existing policies stay relevant as all this rapid-fire innovation continues.

Legislative dysfunctionalism doesn’t help. Congress has a hard time advancing bills on many issues, and technical matters often get pushed to the bottom of the priorities list. The end result is that Congress has increasingly become a non-actor on tech policy in the U.S. Most of the action lies elsewhere.

What’s Your Backup Plan?

This means there is a powerful pragmatic case for embracing soft law efforts that can at least provide us with some “on the ground” governance efforts and practices. Increasingly, soft law is filling the governance gap because hard law is failing for a variety of reasons already identified. Practically speaking, even if you are dead set on imposing a rigid, top-down, technocratic regulatory regime on any given sector or technology, you should at least have a backup plan in mind if you can’t accomplish that.

This is why privacy governance in the United States continues to depend heavily on such soft law efforts to fill the governance vacuum after years of failed attempts to enact a formal federal privacy law. While many academics and others continue to push for such an over-arching data handling law, bottom-up soft law efforts have played an important role in balancing privacy and innovation.

In a similar way, “on the ground” governance efforts are already flourishing for artificial intelligence and machine learning as policymakers continue to very slowly consider whether new hard law initiatives are wise or even possible. For example, congressional lawmakers have been considering a federal regulatory framework for driverless cars for the past several sessions of Congress. Many people in Congress and in academic circles agree that a federal framework is needed, if for no other reason than to preempt the much-dreaded specter of a patchwork of inconsistent state and local regulatory policies. With so much bipartisan agreement out there on driverless car legislation, it would seem like a federal bill would be a slam dunk. For that reason, year in and year out, people always predict: this is the year we’ll get driverless car legislation! And yet, it never happens due to a combination of special interest opposition from unions and trial lawyers, in addition to the pacing problem issue and Congress focusing its limited attention on other issues.

This is also already true for algorithmic regulation. We hear lots of calls to do something, but it remains unclear what that something is or whether it will get done any time soon. If we could not get a privacy bill through Congress after at least a dozen years of major efforts, chances are that broad-based AI regulation is going to be equally challenging.

Soft Law for AI is Exploding

Thus, soft law will likely fill the governance gap for AI. It already is. I’m working on a new book that documents the astonishing array of soft law mechanisms already in place or being developed to address various algorithmic concerns. I can’t seem to finish the book because there is just so much going on related to soft law governance efforts for algorithmic systems. As Mark Coeckelbergh noted in his recent book on AI Ethics, there’s been an “avalanche of initiatives and policy documents” around AI ethics and best practices in recent years. It is a bit overwhelming, but the good news is that there is a lot of consistency in these governance efforts.

To illustrate, a 2019 survey by a group of researchers based in Switzerland analyzed 84 AI ethical frameworks and found “a global convergence emerging around five ethical principles (transparency, justice and fairness, non-maleficence, responsibility and privacy).” A more recent 2021 meta-survey by a team of Arizona State University (ASU) legal scholars reviewed an astonishing 634 soft law AI programs that were formulated between 2016 and 2019. Thirty-six percent of these efforts were initiated by governments, with the others being led by non-profits or private sector bodies. Echoing the findings from the Swiss researchers, the ASU report found widespread consensus among these soft law frameworks on values such as transparency and explainability, ethics/rights, security, and bias. This makes it clear that there is considerable consistency among ethical soft law frameworks in that most of them focus on a core set of values to embed within AI design. The UK-based Alan Turing Institute boils its list down to four “FAST Track Principles”: Fairness, Accountability, Sustainability, and Transparency.

The ASU scholars noted how ethical best practices for product design already influence developers today by creating powerful norms and expectations about responsible product design. “Once a soft law program is created, organizations may seek to enforce it by altering how their employees or representatives perform their duties through the creation and implementation of internal procedures,” they note. “Publicly committing to a course of action is a signal to society that generates expectations about an organization’s future actions.”

This is important because many major trade associations and individual companies have been formulating governance frameworks and ethical guidelines for AI development and use. For example, among large trade associations, the U.S. Chamber of Commerce, the Business Roundtable, the BSA | The Software Alliance, and ACT (The App Association) have all recently released major AI best practice guidelines. Notable corporate efforts to adopt guidelines for ethical AI practices include statements or frameworks by IBM, Intel, Google, Microsoft, Salesforce, SAP, and Sony, to name just a few. They are also creating internal champions to push AI ethics through either the appointment of Chief Ethical Officers, the creation of official departments, or both plus additional staff to guide the process of baking in AI ethics by design.

Once again, there is remarkable consistency among these corporate statements in terms of the best practices and ethical guidelines they endorse. Each trade association or corporate set of guidelines aligns closely with the core values identified in the hundreds of other soft law frameworks that ASU scholars surveyed. These efforts go a long way toward helping to promote a culture of responsibility among leading AI innovators. We can think of this as the professionalization of AI best practices.

What Soft Law Critics Forget

Some will claim that “on the ground” soft law efforts are not enough, but they typically make two mistakes when saying so.

Their first mistake is thinking that hard law is practical or even optimal for fast-paced, highly mercurial AI and ML technologies. It’s not just that the pacing problem necessitates new thinking about governance. Critics fail to understand how hard law would likely significantly undermine algorithmic innovation because algorithmic systems can change by the minute and require a more agile and adaptive system of governance by their very nature.

This is a major focus of my book. I previously published a draft chapter from it on “The Proper Governance Default for AI,” and another essay on “Why the Future of AI Will Not Be Invented in Europe.” These essays explain why a Precautionary Principle-oriented regulatory regime for algorithmic systems would stifle technological development, undermine entrepreneurialism, diminish competition and global competitive advantage, and even have a deleterious impact on our national security goals.

Traditional regulatory systems can be overly rigid, bureaucratic, inflexible, and slow to adapt to new realities. They focus on preemptive remedies that aim to predict the future, and future hypothetical problems that may not ever come about. Worse yet, administrative regulation generally preempts or prohibits the beneficial experiments that yield new and better ways of doing things. When innovators must seek special permission before they offer a new product or service, it raises the cost of starting a new venture and discourages activities that benefit society. We need to avoid that approach if we hope to maximize the potential of AI-based technologies.

The second mistake that soft law critics make is that they fail to understand how many hard law mechanisms actually play a role in supporting soft law governance. AI applications already are regulated by a whole host of existing legal policies. If someone does something stupid or dangerous with AI systems, the Federal Trade Commission (FTC) has the power to address “unfair and deceptive practices” of any sort. And state Attorneys General and state consumer protection agencies also routinely address unfair practices and continue to advance their own privacy and data security policies, some of which are often more stringent than federal law.

Meanwhile, several existing regulatory agencies in the U.S. possess investigatory and recall authority that allows them to remove products from the market when certain unforeseen problems manifest themselves. For example, the National Highway Traffic Safety Administration (NHTSA), the Food & Drug Administration (FDA), and the Consumer Product Safety Commission (CPSC) all possess broad recall authority that could be used to address risks that develop for many algorithmic or robotic systems. NHTSA is currently using its investigative authority to evaluate Tesla’s claims about “full self-driving” technology, and the agency has the power to take action against the company under existing regulations. Likewise, the FDA used its broad authority to crack down on genetic testing company 23andMe many years ago. And CPSC and the FTC have broad authority to investigate claims made by innovators, and they’ve already used it. It’s not like our expansive regulatory state lacks considerable existing power to police new technology. If anything, the power of the administrative state is too broad and amorphous and it can be abused in certain instances.

Perhaps most importantly, our common law system can address other deficiencies with AI-based systems and applications using product defects law, torts, contract law, property law, and class action lawsuits. This is a better way of addressing risks compared to preemptive regulation of general-purpose AI technology because it at least allows the technologies to first develop and then see what actual problems manifest themselves. Better to treat innovators as innocent until proven guilty than the other way around.

There are other thorny issues that deserve serious policy consideration and perhaps even some new rules. But how risks are addressed matters deeply. Before we resort to heavy-handed, legalistic solutions for possible problems, we should exhaust all other potential remedies first.

In other words, “on the ground” soft law governance mechanisms and ex post legal solutions should generally trump ex ante (preemptive, precautionary) regulatory constraints. But we should look for ways to refine and improve soft law governance tools, perhaps through better voluntary certification and auditing regimes to hold developers to a high standard as it pertains to the important AI ethical practices we want them to uphold. This is the path forward to achieve responsible AI innovation without the heavy-handed baggage associated with more formalistic, inflexible, regulatory approaches that are ill-suited for complicated, rapidly-evolving computational and computing technologies.

___________________

Related Reading on AI & Robotics

- Adam Thierer, “My Forthcoming Book on Artificial Intelligence & Robotics Policy,” Medium, July 22, 2022.

- Adam Thierer, “Responses to Jack Clark’s AI Policy Tweetstorm,” Medium, August 8, 2022.

- Adam Thierer, “Why the Future of AI Will Not Be Invented in Europe,” Technology Liberation Front, August 1, 2022.

- Adam Thierer, “How Science Fiction Dystopianism Shapes the Debate over AI & Robotics,” Discourse, July 26, 2022.

- Adam Thierer, “Why is the US Following the EU’s Lead on Artificial Intelligence Regulation?” The Hill, July 21, 2022.

- Adam Thierer, “Algorithmic Auditing and AI Impact Assessments: The Need for Balance,” Medium, July 13, 2022.

- Adam Thierer, “What I Learned about the Power of AI at the Cleveland Clinic,” Medium, May 6, 2022.

- Adam Thierer, Governing Emerging Technology in an Age of Policy Fragmentation and Disequilibrium, American Enterprise Institute (April 2022).

- Adam Thierer and John Croxton, “Elon Musk and the Coming Federal Showdown Over Driverless Vehicles,” Discourse, November 22, 2021.

- Adam Thierer, “A Global Clash of Visions: The Future of AI Policy,” The Hill, May 4, 2021.

- Adam Thierer, “A Brief History of Soft Law in ICT Sectors: Four Case Studies,” Jurimetrics, Vol. 61 (Fall 2021): 79–119.

- Adam Thierer, “U.S. Artificial Intelligence Governance in the Obama–Trump Years,” IEEE Transactions on Technology and Society, Vol. 2, Issue 4 (2021).

- Adam Thierer, “The Worst Regulation Ever Proposed,” The Bridge, September 2019.

- Ryan Hagemann, Jennifer Huddleston Skees & Adam Thierer, “Soft Law for Hard Problems: The Governance of Emerging Technologies in an Uncertain Future,” Colorado Technology Law Journal, Vol. 17 (2018).

- Adam Thierer & Trace Mitchell, “No New Tech Bureaucracy,” Real Clear Policy, September 10, 2020.

- Adam Thierer, “OMB’s AI Guidance Embodies Wise Tech Governance,” Mercatus Center Public Comment, March 13, 2020.

- Adam Thierer, “Europe’s New AI Industrial Policy,” Medium, February 20, 2020.

- Adam Thierer, “Trump’s AI Framework & the Future of Emerging Tech Governance,” Medium, January 8, 2020.

- Adam Thierer, “Soft Law: The Reconciliation of Permissionless & Responsible Innovation,” Chapter 7 in Adam Thierer, Evasive Entrepreneurs & the Future of Governance (Washington, DC: Cato Institute, 2020): 183–240.

- Andrea O’Sullivan & Adam Thierer, “Counterpoint: Regulators Should Allow the Greatest Space for AI Innovation,” Communications of the ACM, Volume 61, Issue 12, (December 2018): 33–35.

- Adam Thierer, Andrea O’Sullivan & Raymond Russell, “Artificial Intelligence and Public Policy,” Mercatus Research (2017).

- Adam Thierer, “Shouldn’t the Robots Have Eaten All the Jobs at Amazon By Now?” Technology Liberation Front, July 26, 2017.

- Adam Thierer, “Are ‘Permissionless Innovation’ and ‘Responsible Innovation’ Compatible?” Technology Liberation Front, July 12, 2017.

- Adam Thierer, “The Growing AI Technopanic,” Medium, April 27, 2017.

- Adam Thierer, “The Day the Machines Took Over,” Medium, May 11, 2017.

- Adam Thierer, “When the Trial Lawyers Come for the Robot Cars,” Slate, June 10, 2016.

- Adam Thierer, “‘Learning by Doing,’ the Process of Innovation & the Future of Employment,” Technology Liberation Front, September 25, 2015.

- Adam Thierer, “Problems with Precautionary Principle-Minded Tech Regulation & a Federal Robotics Commission,” Medium, September 22, 2014.

- Adam Thierer, “On the Line between Technology Ethics vs. Technology Policy,” Technology Liberation Front, August 1, 2013.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.