In my latest Discourse column (“We Really Need To ‘Have a Conversation’ About AI … or Do We?”), I discuss two commonly heard lines in tech policy circles.

1) “We need to have a conversation about the future of AI and the risks that it poses.”

2) “We should get a bunch of smart people in a room and figure this out.”

I note that, if you’ve read enough essays, books or social media posts about artificial intelligence (AI) and robotics, among other emerging technologies, then chances are you’ve stumbled on variants of these two arguments many times over. They are almost impossible to disagree with in theory, but when you investigate what they actually mean in practice, they turn out to be largely meaningless rhetorical flourishes that threaten to hold up meaningful progress on the AI front. I go on to argue in my essay that:

I’m not at all opposed to people having serious discussions about the potential risks associated with AI, algorithms, robotics or smart machines. But I do have an issue with: (a) the astonishing degree of ambiguity at work in the world of AI punditry regarding the nature of these “conversations,” and, (b) the fact that people who are making such statements apparently have not spent much time investigating the remarkable number of very serious conversations that have already taken place, or which are ongoing, about AI issues.

In fact, it very well could be the case that we have too many conversations going on currently about AI issues and that the bigger problem is instead one of better coordinating the important lessons and best practices that we have already learned from those conversations.

I then unpack each of those lines and explain what is wrong with them in more detail.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.

Running List of My Research on AI, ML & Robotics Policy

by Adam Thierer on July 29, 2022

[last updated 3/13/2025 – Check my Medium page for latest posts]

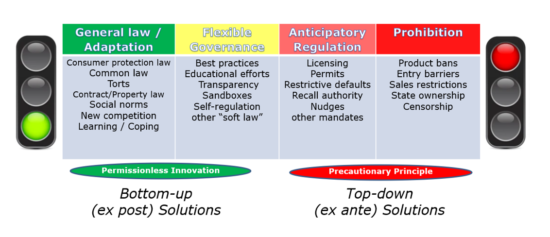

This is a running list of all the essays and reports I’ve already rolled out on the governance of artificial intelligence (AI), machine learning (ML), and robotics. Why have I decided to spend so much time on this issue? Because this will become the most important technological revolution of our lifetimes. Every segment of the economy will be touched in some fashion by AI, ML, robotics, and the power of computational science. It should be equally clear that public policy will be radically transformed along the way.

Eventually, all policy will involve AI policy and computational considerations. As AI “eats the world,” it eats the world of public policy along with it. The stakes here are profound for individuals, economies, and nations. As a result, AI policy will be the most important technology policy fight of the next decade, and perhaps the next quarter century. Those who are passionate about the freedom to innovate need to prepare to meet the challenge as proposals to regulate AI proliferate.

There are many socio-technical concerns surrounding algorithmic systems that deserve serious consideration and appropriate governance steps to ensure that these systems are beneficial to society. However, there is an equally compelling public interest in ensuring that AI innovations are developed and made widely available to help improve human well-being across many dimensions. And that’s the case that I’ll be dedicating my life to making in the coming years.

Here’s the list of what I’ve done so far. I will continue to update this as new material is released.