Copyrights and patents differ from tangible property in fundamental ways. Economically speaking, copyrights and patents are not rivalrous in consumption; whereas all the world can sing the same beautiful song, for instance, only one person can swallow a cool gulp of iced tea. Legally speaking, copyrights and patents exist only thanks to the express terms of the U.S. Constitution and various statutory enactments. In contrast, we enjoy tangible property thanks to common law, customary practices, and nature itself. Even birds recognize property rights in nests. They do not, however, copyright their songs.

Those represent but some of the reasons I have argued that we should call copyright an intellectual privilege, reserving property for things that deserve the label. Another, related reason: Calling copyright property risks eroding that valuable service mark.

Property as a service mark, like FedEx or Hooters? Yes. Thanks to long use, property has come to represent a distinct set of legal relations, including hard and fast rules relating to exclusion, use, alienation, and so forth. Copyright embodies those characteristics imperfectly, if at all. To call it intellectual property risks confusing consumers of legal services—citizens, attorneys, academics, judges, and lawmakers—about the nature of copyright. Worse yet, it confuses them about the nature of property. The property service mark suffers not merely dilution from copyright’s infringing use, but tarnishment, too.

As proof of how copyright threatens to erode property, consider Ben Depoorter, Fair Trespass, 111 Colum. L. Rev. 1090 (2011). From the abstract:

Trespass law is commonly presented as a relatively straightforward doctrine that protects landowners against intrusions by opportunistic trespassers. . . . This Essay . . . develops a new doctrinal framework for determining the limits of a property owner’s right to exclude. Adopting the doctrine of fair use from copyright law, the Essay introduces the concept of “fair trespass” to property law doctrine. When deciding trespass disputes, courts should evaluate the following factors: (1) the nature and character of the trespass; (2) the nature of the protected property; (3) the amount and substantiality of the trespass; and (4) the impact of the trespass on the owner’s property interest. . . . [T]his novel doctrine more carefully weighs the interests of society in access against the interests of property owners in exclusion.

Although I do not agree with every aspect of Prof. Depoorter’s doctrinal analysis, he correctly observes that trespass law includes some fuzzy bits. Nor do I complain about his overall form of argument. It is not a tack I would take, but it was near-inevitable that some legal scholar would eventually argue back from copyright to claim that real property, too, should fall prey to a multi-factor, fact-intensive “fair use” defense. I merely take this opportunity to remind fellow friends of liberty that they can expect more of the same, and more erosion of the property service mark, if they fail to recognize copyrights and patents as no more than intellectual privileges.

[Crossposted at Agoraphilia, Technology Liberation Front, and Intellectual Privilege.]

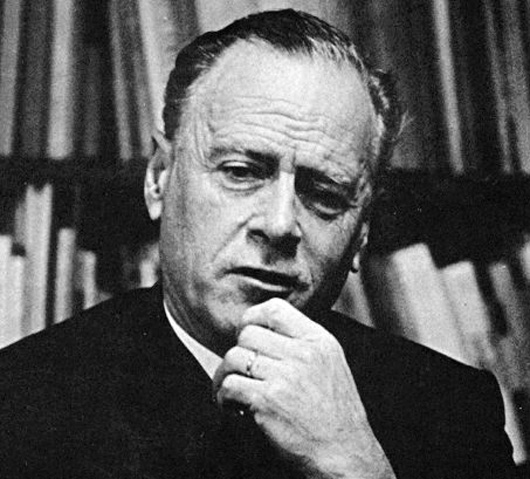

I have always struggled with the work of media theorist Marshall McLuhan. I find it to be equal parts confusing and compelling; it’s persuasive at times and then utterly perplexing elsewhere. I just can’t wrap my head around him and yet I can’t stop coming back to him.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
