Articles by Anne Hobson

Anne Hobson is a Program Manager for Academic & Student Programs at the Mercatus Center at George Mason University. Previously, she worked as a technology policy fellow for the R Street Institute and a public policy associate at Facebook. Anne is currently pursuing a PhD in economics from George Mason University. She received her MA in applied economics from George Mason University and her BA in International Studies from Johns Hopkins University.

[Co-authored with Walter Stover]

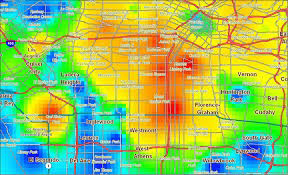

Artificial Intelligence (AI) systems have grown more prominent in both their use and their unintended effects. Just last month, LAPD announced that they would end their use of a predictive policing system known as PredPol, which had sustained criticism for reinforcing policing practices that disproportionately affect minorities. Such incidents of machine learning algorithms producing unintentionally biased outcomes have prompted calls for ‘ethical AI’. However, this approach focuses on technical fixes to AI, and ignores two crucial components of undesired outcomes: the subjectivity of data fed into and out of AI systems, and the interaction between actors who must interpret that data. When considering regulation on artificial intelligence, policymakers, companies, and other organizations using AI should therefore focus less on the algorithms and more on data and how it flows between actors to reduce the risk of misdiagnosing AI systems. To be sure, applying an ethical AI framework is better than discounting ethics altogether, but an approach that focuses on the interaction between human and data processes is a better foundation for AI policy.

The fundamental mistake underlying the ethical AI framework is that it treats biased outcomes as a purely technical problem. If this were true, then fixing the algorithm would be an effective solution, because the outcome would be purely defined by the tools applied. In the case of landing a man on the moon, for instance, we can tweak the telemetry of the rocket with well-defined physical principles until the man is on the moon. In the case of biased social outcomes, the problem is not well defined. Who decides what an appropriate level of policing is for minorities? What sentence lengths are appropriate for which groups of individuals? What is an acceptable level of bias? An AI is simply a tool that transforms input data into output data, but it is people who give meaning to data at both steps in the context of their understanding of these questions and what appropriate measures of such outcomes are.

Continue reading →

– Coauthored with Anna Parsons

“Algorithms are only as good as the data that gets packed into them,” said Democratic Presidential hopeful Elizabeth Warren. “And if a lot of discriminatory data gets packed in, if that’s how the world works, and the algorithm is doing nothing but sucking out information about how the world works, then the discrimination is perpetuated.”

Warren’s critique of algorithmic bias reflects a growing concern surrounding our interaction with algorithms every day.

Algorithms leverage big data sets to make or influence decisions from movie recommendations to creditworthiness. Before algorithms, humans made decisions in advertising, shopping, criminal sentencing, and hiring. Legislative concerns center on bias – the capacity for algorithms to perpetuate gender bias and racial and minority stereotypes. Nevertheless, current approaches to regulating artificial intelligence (AI) and algorithms are misguided.

Continue reading →

–Coauthored with Mercatus MA Fellow Jessie McBirney

Flat standardized test scores, low college completion rates, and rising student debt have led many to question the bachelor’s degree as the universal ticket to the middle class. Now, bureaucrats are turning to the job market for new ideas. The result is a renewed enthusiasm for Career and Technical Education (CTE), which aims to “prepare students for success in the workforce.” Every high school student stands to benefit from a fun, rigorous, skills-based class, but the latest reauthorization of the Carl D. Perkins Act, which governs CTE at the federal level, betrays a faulty economic theory behind the initiative.

Modern CTE is more than a rebranding of yesterday’s vocational programs, which earned a reputation as “dumping grounds” for struggling students and, unfortunately, minorities. Today, CTE classes aim to be academically rigorous and cover career pathways ranging from manufacturing to Information Technology and STEM (science, technology, engineering, and mathematics). Most high school CTE occurs at traditional public schools, where students take a few career-specific classes alongside their core requirements.

Continue reading →

by Walter Stover and Anne Hobson

Franklin Foer’s article in the Atlantic on Jeff Bezos’s master plan offers insight into the mind of the famed CEO, but his argument that Amazon is all-powerful is flawed. Foer overlooks the role of consumers in shaping Amazon’s narrative. In doing so, he overestimates the actual autonomy of Bezos and the power of Amazon over its consumers.

The article falls prey to an atomistic theory of Amazon. The thinking goes like this: I am an atom, and Amazon is a (much) larger atom. Because Amazon is so much larger than I am, I need some intervening force to ensure that Amazon does not prey on me. This intervening force must belong to an even larger atom (the U.S. government) in order to check Amazon’s power. The atomistic lens sees individuals as interchangeable and isolated from each other, able to be considered one at a time.

Foer’s application of this theory appears in his treatment of Hayek, one of the staunchest opponents of aggregation and atomism. For example, when he summarizes Hayek’s paper “The Use of Knowledge in Society,” he renders Hayek’s argument as the claim that “…no bureaucracy could ever match the miracle of markets, which spontaneously and efficiently aggregate the knowledge of a society.” Yet Hayek found the notion of aggregation highly problematic, as seen in another of his articles, “Competition as a Discovery Procedure,” in which he criticizes the idea of a “scientific” objective approach to measuring market variables. His argument against trying to build a science on macroeconomic variables notes that “…the coarse structure of the economy can exhibit no regularities that are not the results of the fine structure… and that those aggregate or mean values… give us no information about what takes place in the fine structure.”

Neither Amazon nor the market can aggregate the knowledge of a society. We can try to speak of the market in aggregate terms, but we end up summing up all of the differences between individuals and concealing the action and agency of the individuals at the bottom. We cannot speak of market activity without reference to the patterns of individual interactions. It is best to think of the market as an emergent, unintended outcome of a constellation of individual actors, not atoms, each of whom has different talents, wants, knowledge, and resources. Actors enter into exchanges with each other and form complicated, semi-rigid, multi-leveled social networks.

Continue reading →

– Coauthored with Mercatus MA Fellow Walter Stover

The use of artificial intelligence technology in dynamic pricing has given rise to fears of ‘digital market manipulation.’ Proponents of this claim argue that companies leverage artificial intelligence (AI) technology to obtain greater information about people’s biases and then exploit them for profit through personalized pricing. Those who advance these arguments often support regulation to protect consumers against information asymmetries and subsequent coercive market practices; however, such fears ignore the importance of the institutional context. These market manipulation tactics will not have a great effect precisely because they lack coercive power to force people to open their wallets. Such coercive power is a function of social and political institutions, not of the knowledge of people’s biases and preferences that could be gathered from algorithms.

As long as companies such as Amazon operate in a competitive market setting, they are constrained in their ability to coerce customers who can vote with their feet, regardless of how much knowledge they actually gather about those customers’ preferences through AI technology.

Continue reading →

-Coauthored with Mercatus MA Fellow Walter Stover

Imagine visiting Amazon’s website to buy a Kindle. The product description shows a price of $120. You purchase it, only for a co-worker to tell you he bought the same device for just $100. What happened? Amazon’s algorithm predicted that you would be willing to pay more for the same device. Amazon and other companies before it, such as Orbitz, have experimented with dynamic pricing models that feed personal data collected on users to machine learning algorithms to try to predict how much different individuals are willing to pay. Instead of a fixed price point, users could now see different prices according to the profile that the company has built up of them. This has led the U.S. Federal Trade Commission, among other researchers, to explore fears that AI, in combination with big datasets, will harm consumer welfare by letting companies manipulate consumers to increase their profits.

The promise of personalized shopping and the threat of consumer exploitation, however, first supposes that AI will be able to predict our future preferences. By gathering data on our past purchases, our almost-purchases, our search histories, and more, some fear that advanced AI will build a detailed profile that it can then use to estimate our future preference for a certain good under particular circumstances. This will escalate until companies are able to anticipate our preferences, and pressure us at exactly the right moments to ‘persuade’ us into buying something we ordinarily would not.

Such a scenario cannot come to pass. No matter how much data companies can gather from individuals, and no matter how sophisticated AI becomes, the data to predict our future choices do not exist in a complete or capturable way. Treating consumer preferences as discoverable through sufficiently sophisticated search technology ignores a critical distinction between information and knowledge. Information is objective, searchable, and gatherable. When we talk about ‘data’, we are usually referring to information: particular observations of specific actions, conditions or choices that we can see in the world. An individual’s salary, geographic location, and purchases are data with an objective, concrete existence that a company can gather and include in its algorithms.

Continue reading →

“You don’t gank the noobs,” my friend’s brother explained to me, growing angrier as he watched a high-level player repeatedly stalk and then cut down my feeble, low-level night elf cleric in the massively multiplayer online roleplaying game World of Warcraft. He logged on to the server as his “main,” a high-level gnome mage, and went in search of my killer, carrying out two-dimensional justice. What he meant by his exclamation was that players have developed a social norm banning the “ganking” or killing of low-level “noobs” just starting out in the game. He reinforced that norm by punishing the overzealous player with premature annihilation.

Ganking noobs is an example of undesirable social behavior in a virtual space on par with cutting people off in traffic or budging people in line. Punishments for these behaviors take a variety of forms, from honking, to verbal confrontation, to virtual manslaughter. Virtual reality social spaces, defined as fully artificial digital environments, are the newest medium for social interaction. Increased agency and a sense of physical presence within a VR social world like VRChat allow users to more intensely experience both positive and negative situations, thus reopening the discussion of how best to govern these spaces.

Continue reading →

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
