The Australian government has been running a trial of ISP-level filtering products to determine whether network-based filtering could be implemented by the government to censor certain forms of online content without a major degradation of overall network performance. The government’s report on the issue was released today: Closed-Environment Testing of ISP-Level Internet Content Filtering. It was produced by the Australian Communications & Media Authority (ACMA), which is the rough equivalent of the Federal Communications Commission here in the U.S., but with somewhat broader authority.

The Australian government has been investigating Internet filtering techniques for many years now and has even gone so far as to offer subsidized, government-approved PC-based filters through the Protecting Australian Families Online program. That experiment did not end well, however, as a 16-year-old Australian youth cracked the filter within a half hour of its release. The Australian government next turned its attention to ISP-level filtering as a possible solution and began a test of 6 different network-based filters in Tasmania.

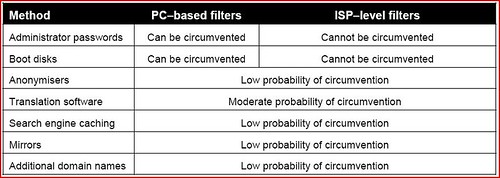

What makes ISP-level (network-based) filtering an attractive approach for many policymakers is that, at least in theory, it could solve the problem the Australian government faced with PC-based (client-side) filters: ISP-level filters are more difficult, if not impossible, to circumvent. That is, if you can somehow filter content and communications at the source–or within the network–then you have a much greater probability of stopping that content from getting through. Here’s a chart from the ACMA’s new report that illustrates what they see as the advantage of ISP-level filters:

Of course, that’s the theory, and it remains to be seen how well it would work in practice. In today’s report, however, the ACMA claims that network-based filtering generally did work well enough in practice during the Tasmanian trial: all 6 filters tested scored at least an 88% effectiveness rate in terms of blocking the content / URLs that the government was hoping would be blocked (and 3 of the products scored above 94%). The report also claims that overblocking of acceptable content was only 8% for all filters tested and 3% for four of the services. Finally, the ACMA said that network degradation was not nearly as big a problem during this round of tests as it was during previous tests, when performance degradation ranged from 75-98%. In this latest test, by contrast, the ACMA said degradation was down but still varied widely, from a low of just 2% for one product to a high of 87% for another.
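To make those two headline numbers concrete, here is a minimal sketch of how an effectiveness rate and an overblocking rate could be computed from a test run. It assumes both are simple proportions over two test sets (URLs that should be blocked and URLs that should not), which is my reading rather than the ACMA's stated methodology. The function name and the counts are hypothetical and are not taken from the report.

```python
def filter_rates(should_block_total, correctly_blocked,
                 acceptable_total, wrongly_blocked):
    """Return (effectiveness %, overblocking %) for one filter.

    Assumption: each rate is a plain proportion of its own test set.
    """
    # Share of the "should be blocked" set that the filter actually stopped.
    effectiveness = 100.0 * correctly_blocked / should_block_total
    # Share of the "acceptable" set that the filter stopped by mistake.
    overblocking = 100.0 * wrongly_blocked / acceptable_total
    return effectiveness, overblocking


# Hypothetical counts chosen only to illustrate the arithmetic.
effectiveness, overblocking = filter_rates(
    should_block_total=1000, correctly_blocked=880,
    acceptable_total=1000, wrongly_blocked=80,
)
print(effectiveness, overblocking)
```

Note that the two rates are measured against different sets, so a filter can look strong on one and poor on the other.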

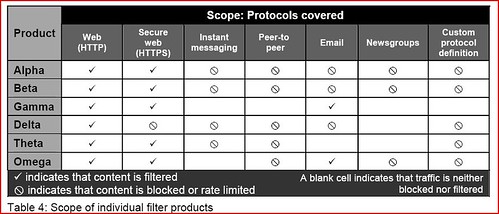

So, what to make of this report? There are a couple of interesting caveats in the report that raise some questions regarding the overall effectiveness and feasibility of applying such ISP-level filtering on a broader scale. For example, the report mentions on p. 45 that the scope of network filtering is mostly limited to HTTP and HTTPS. For other services and protocols, including peer-to-peer, IM, e-mail, newsgroups, or custom protocols, the products generally could not filter properly. The report notes, “No products are capable of distinguishing illegal content and content that may be regarded as inappropriate on non-web protocols, excepting two products that can identify particular types of content carried via one email protocol, and one product that can identify particular types of content carried via one streaming protocol.”

Of course, the filters could block those services and protocols outright, but that’s not a workable solution in the long run, since people demand those services. As the ACMA notes later in the report (on p. 53), “Where such protocols are used to carry legitimate traffic and are widely used by children for study and social interaction, ACMA regards the absence of a more targeted capability as a deficiency.” Indeed, that’s a pretty significant deficiency!
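The all-or-nothing problem with non-web protocols can be sketched in a few lines. This is a toy model, not how any of the tested products works: the blocklist, the host names, and the `decide` function are all hypothetical, and real filters inspect live traffic rather than labeled strings.

```python
WEB_PROTOCOLS = {"http", "https"}   # the scope the report says filters mostly cover
BLOCKLIST = {"blocked.example"}     # hypothetical list of prohibited hosts


def decide(protocol, host, block_non_web=False):
    """Return "block" or "allow" for one connection in this toy model."""
    if protocol.lower() in WEB_PROTOCOLS:
        # Web traffic: the filter can make a targeted, per-host decision.
        return "block" if host in BLOCKLIST else "allow"
    # Non-web traffic (P2P, IM, e-mail, ...): no targeted decision is
    # possible here, so the only choice is the whole protocol, on or off.
    return "block" if block_non_web else "allow"


print(decide("http", "blocked.example"))                     # targeted block
print(decide("p2p", "blocked.example"))                      # slips through
print(decide("p2p", "friendly.example", block_non_web=True)) # legitimate use lost
```

Either setting of `block_non_web` fails someone: leave it off and prohibited content moves to other protocols, turn it on and legitimate traffic is cut off too.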

But here’s the more interesting question: Would a centralized filtering mandate by governments encourage people to shift content or communications to those other protocols or services, or encourage others to create new protocols or services that would be more likely to evade centralized ISP-filtering? I don’t want to overplay this point because it is certainly true that much (perhaps most) of what governments want censored today would likely be captured by such centralized filters. But what sort of filtering failure rate is acceptable, and what will happen in coming years as a result of such a mandate? I’m not saying I have any answers, but it is certainly worth exploring those questions.

More importantly, I have obviously not even gotten into the threshold question here about what sort of content or materials governments would deem “illegal” such that they would be filtered in the first place. That’s obviously a very significant and controversial question. Moreover, why should the decision to censor in such a sweeping fashion be transferred to the network level instead of remaining at the individual household level?

Of course, governments might require that every ISP simply offer such a network filtering solution and then let individual households choose to opt out of or opt in to the system. But who decides what is blocked at the headend under such a scheme? That determination is currently left to private filtering companies here in the U.S. and in many other countries, but the Australians have a different sort of regime and history when it comes to content regulation. They and other countries would likely be more comfortable making those determinations about acceptable content and then requiring the ISPs to act as deputized agents of speech and morality enforcement. And there’s no First Amendment in Australia to stop them from doing so.

Beyond such issues about the wisdom and scope of government censorship, there’s also the question of whether such centralized filtering poses other concerns about the extent of government authority. Many people will be put off by the prospect of national governments playing the role of national nanny via centralized network filters. (Fill in your own “Big Brother” or China analogy here). But many others will be left wondering what else such a move subsequently allows the government to do in terms of network snooping and surveillance. And there are other concerns here regarding the ongoing cost of the process (who pays for network upgrades?) and how else those resources might have been used.

But make no mistake about it, folks: this debate is about to get red-hot here in the States as lawmakers increasingly look for new ways to ‘deputize the middleman’ and require greater policing of the Net by online intermediaries, and not just ISPs. If ISPs are required to engage in such centralized filtering, then one can easily imagine how other online intermediaries (search providers, social networking sites, online gaming platform providers, other application vendors, etc.) would be expected to play ball too, especially for all that user-generated content out there that could evade centralized ISP filters. If they don’t play ball, one could imagine the government seeking to impose some sort of liability on them. And that then opens a debate about the applicability of Sec. 230 of the Communications Decency Act, which has generally immunized intermediaries from such liability.

I hope our readers will provide additional thoughts and comments about the Australian scheme and centralized, network-based, mandatory ISP filtering more generally. Specifically, I am hoping some of our savvy readers can identify some of the technical hurdles standing in the way of such schemes, or what unintended consequences they might create.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
