It was my pleasure this week to be invited to deliver some comments at an event hosted by the Information Technology and Innovation Foundation (ITIF) to coincide with the release of their latest study, “The Privacy Panic Cycle: A Guide to Public Fears About New Technologies.” The goal of the new ITIF report, which was co-authored by Daniel Castro and Alan McQuinn, is to highlight the dangers associated with “the cycle of panic that occurs when privacy advocates make outsized claims about the privacy risks associated with new technologies. Those claims then filter through the news media to policymakers and the public, causing frenzies of consternation before cooler heads prevail, people come to understand and appreciate innovative new products and services, and everyone moves on.” (p. 1)

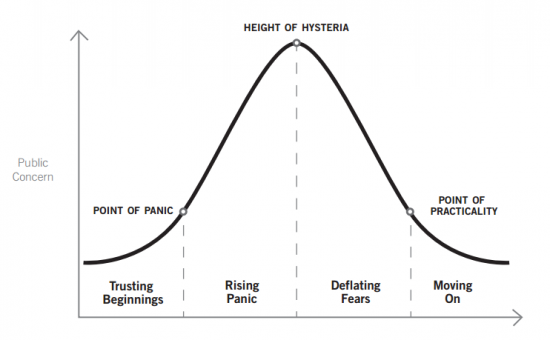

As Castro and McQuinn describe it, the privacy panic cycle “charts how perceived privacy fears about a technology grow rapidly at the beginning, but eventually decline over time.” They divide this cycle into four phases: Trusting Beginnings, Rising Panic, Deflating Fears, and Moving On. Here’s how they depict it in an image:

The report can be seen as an extension of the literature on “moral panics” and “techno-panics.” Some relevant texts in this field include Stanley Cohen’s Folk Devils and Moral Panics, Erich Goode and Nachman Ben-Yehuda’s Moral Panics: The Social Construction of Deviance, Cass Sunstein’s Laws of Fear, and Barry Glassner’s Culture of Fear. But there’s a rich body of academic writing on this topic and I’ve tried to make a small contribution to this literature in recent years, most notably with a lengthy 2013 law review article, “Technopanics, Threat Inflation, and the Danger of an Information Technology Precautionary Principle.” In that paper, I try to connect the literature on moral panic theory (which mostly focuses on panics about speech and cultural changes) to other scholarship about how panics and threat inflation are used in many other contexts, including the fields of national security policy, cybersecurity, and more.

I define “technopanic” as “intense public, political, and academic responses to the emergence or use of media or technologies, especially by the young.” “Threat inflation” has been defined by national security policy experts Jane K. Cramer and A. Trevor Thrall as “the attempt by elites to create concern for a threat that goes beyond the scope and urgency that a disinterested analysis would justify.”

Castro and McQuinn’s new study on privacy panic cycles fits neatly within this analytical framework and makes an important contribution to the literature. They warn of the real dangers associated with these privacy panics, especially in terms of lost opportunities for innovation. “Policymakers should not get caught up in the panics that follow in the wake of new technologies,” they argue, “and they should not allow hypothetical, speculative, or unverified claims to color the policies they put in place. Similarly, they should not allow unsubstantiated claims put forth by privacy fundamentalists to derail legitimate public sector efforts to use technology to improve society.” (p. 28)

I think one of the most important takeaways from the study is that, as Castro and McQuinn note, “history has shown, many of the overinflated claims about loss of privacy have never materialized.” (p. 28) They identify many reasons why that may be the case but, most notably, they explain how societal attitudes often adjust quickly and also that “social norms dissuade many practices that are feasible but undesirable.” (p. 28) I have spent a lot of time thinking through this process of individual and social acclimation to new technologies and, most recently, wrote an essay on this topic entitled “Muddling Through: How We Learn to Cope with Technological Change.”

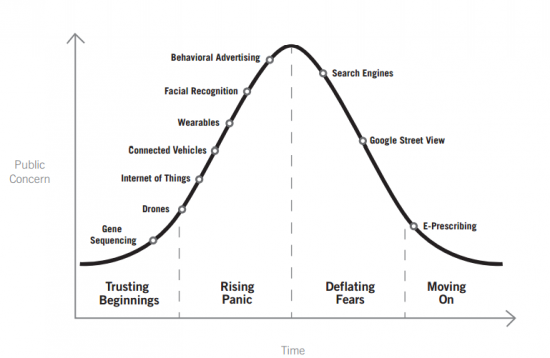

Castro and McQuinn highlight several historical case studies that illustrate how privacy panics play out in practice. They include studies of photography, the transistor, and RFID tags. They also continue on to map out how various new technologies are currently—or might soon be—experiencing a privacy panic. Those include drones, facial recognition, connected cars, behavioral advertising, the Internet of Things and wearable tech. Here’s where Castro and McQuinn believe each of those technologies falls currently on the privacy panic curve.

One problem with the ITIF report, however, is that it avoids the question of what constitutes a privacy “harm” serious enough to be worth actually panicking over. Certainly some things deserve special concern, perhaps even a little bit of panic. Of course, as I noted in my remarks at the event, this is a problem with a great deal of the literature in this field, given how difficult it is to define what we even mean by “privacy” or “privacy harm.” Nonetheless, while some privacy fundamentalists are far too aggressive in using amorphous conceptions of privacy harms to fuel privacy panics, it can also be the case that others (like Castro, McQuinn, and myself) don’t do enough to specify when extremely serious privacy problems exist that warrant heightened concern.

The ITIF report rightly singles out the many groups that all too often use fear tactics and threat inflation to advance their own agendas. In the academic literature on moral panics, these people or groups are referred to as “fear entrepreneurs.” They hope to create and then take advantage of a state of fear to demand that “something must be done” about supposed problems that are often either greatly overstated or which will be solved (or just go away) over time. (For more on “fear entrepreneurs,” see Frank Furedi’s outstanding 2009 article on “Precautionary Culture and the Rise of Probabilistic Risk Assessment.”) These individuals and groups often end up having a disproportionate impact on policy debates and, through their vociferous activism, threaten to achieve a sort of “heckler’s veto” over digital innovation.

However, as I stressed in my remarks at ITIF’s launch event for the study, I believe that Castro and McQuinn were wrong to single out the International Association of Privacy Professionals (IAPP) as one of these troublemakers. Castro and McQuinn claim that “there is now a professional class of people whose job is to manage privacy risks and promote the idea that technology is becoming more invasive. These privacy professionals have a vested interest in inflating the perceived privacy risk of new technologies as their livelihood depends on businesses’ willingness to pay them to address these concerns.” (p. 8)

I think that mischaracterizes the role that most IAPP-trained privacy professionals play today. I have done a lot of work with IAPP itself and many of the privacy professionals they have trained. In my experience, these folks aren’t trying to fan the flames of “privacy panics.” To the contrary, many (perhaps most) IAPP professionals are actively involved in putting out those fires or making sure that they do not start raging in the first place. This is particularly true of the huge number of IAPP-trained privacy professionals who work for major technology companies and who work hard every day to find practical solutions to real-world privacy and security-related concerns.

Of course, as with any large membership organization, one can find some IAPP-trained privacy professionals who may indeed be guilty of fueling privacy panics for personal or organizational purposes. After all, some IAPP-trained folks work for privacy advocacy organizations which could be classified as “privacy fundamentalists” in their philosophical orientation. But just because some IAPP-trained people play techno-panic games, it certainly doesn’t mean that most of them do.

Relatedly, another small nitpick I have with the ITIF study is that it groups together a large number of privacy and security-focused tech policy groups and implies that they are all equally guilty of fueling privacy panics. In reality, there is a small core group of individuals and advocacy organizations who are far more vociferous and extreme in their privacy panic rhetoric. Others may be guilty of that at times, but not nearly to the same extent as the most panicky Chicken Littles.

The only other problem I had with the study, and this is really quite a small matter, is that I would have liked to see some discussion of strategies we might employ to help counter privacy panics, or to lessen the likelihood that they develop at all. In my own work, I have tried to develop constructive solutions to privacy and security-related concerns that might give rise to panics. Those solutions include things like education and tech literacy efforts, empowerment tools, transparency efforts, and so on. It’s also worth reminding concerned critics that a broad range of existing legal remedies can help address privacy concerns after the fact. These include torts and common law solutions, contractual remedies, class actions, other targeted legal solutions, and enforcement against “unfair and deceptive practices” by the Federal Trade Commission or state attorneys general. There are also important industry self-regulatory efforts and best practices that can help alleviate many of these privacy concerns. I would have liked the ITIF study to address these or other potential solutions to privacy panics.

Overall, however, I think the ITIF report makes an important contribution to the literature in this field and provides us with a useful analytic framework to help us evaluate and critique privacy-related technopanics in the future.

The video of the launch event is below and the full paper can be found here. Also, for further reading on technopanics, see my compendium of 40 essays I have written on the topic.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.