At the last possible moment before the Christmas holiday, the FCC published its Report and Order on “Preserving the Open Internet,” capping off years of largely content-free “debate” on the subject of whether or not the agency needed to step in to save the Internet.

In the end, only FCC Chairman Julius Genachowski fully supported the final solution. His two Democratic colleagues concurred in the vote (one approved in part and concurred in part) and issued separate opinions indicating their belief that stronger measures and a sounder legal foundation were required to withstand likely court challenges. The two Republican Commissioners vigorously dissented, which is not the norm in this kind of regulatory action. Independent regulatory agencies, like the U.S. Courts of Appeals, strive for and generally achieve consensus in their decisions.

I was quoted this morning in Sara Jerome’s story for The Hill on the weekend seizures of domain names the government believes are selling black-market, counterfeit, or copyright-infringing goods.

The seizures take place in the context of an ongoing investigation in which prosecutors make purchases from the sites and then determine that the goods violate trademarks or copyrights or both.

Several reports, including those from CNET, The Washington Post and Techdirt, ask how the government can seize a domain name without a trial and, indeed, without even giving notice to the registered owners.

The short answer is the federal civil forfeiture law, which has been the subject of increasing criticism unrelated to Internet issues. (See http://law.jrank.org/pages/1231/Forfeiture-Constitutional-challenges.html for a good synopsis of recent challenges, most of which fail.)

Inspired by thoughtful pieces by Mike Masnick on Techdirt and L. Gordon Crovitz’s column yesterday in The Wall Street Journal, I wrote a perspective piece this morning for CNET regarding the European Commission’s recently proposed “right to be forgotten.”

A Nov. 4th report promises new legislation next year “clarifying” this right under EU law, suggesting not only that the Commission thinks it’s a good idea but, even more surprising, that it already exists under the landmark 1995 Privacy Directive.

What is the “right to be forgotten”? The report is cryptic and awkward on this important point, describing “the so-called ‘right to be forgotten’, i.e. the right of individuals to have their data no longer processed and deleted when they [that is, the data] are no longer needed for legitimate purposes.”

This week, we’ve seen reports in both The New York Times (“Stage Set for Showdown on Online Privacy“) and The Wall Street Journal (“Watchdog Planned for Online Privacy“) that the Obama Administration is inching closer toward adopting a new Internet regulatory regime in the name of protecting privacy online. In this essay, I want to talk about information control regimes, not from a normative perspective, but from a practical one. In doing so, I will compare the relative complexities associated with controlling various types of information flows to protect against four theoretical information harms: objectionable content, defamation, copyright, and privacy.

From a normative perspective, there are many arguments for and against various forms of information control. Here, for example, are the reasons typically given for why society might want to impose regulations on the Internet (or other communications channels) to address each of the four issues identified above:

- Content control / Censorship: We must control information flows to protect children from objectionable content or all citizens against some other form of supposedly harmful speech (hate speech, terrorist recruitment, etc.).

- Defamation control: We must control information flows to protect people’s reputations.

- Copyright control: We must control information flows to protect the property rights of creators against unauthorized use / distribution.

- Privacy control: We must control information flows to protect individuals against the spread of information about them.

Again, there are plenty of good normative arguments in the opposite direction, many of which are based on free speech considerations since, by definition, information control regimes limit the flow of various forms of speech. For privacy, I discussed such speech-related considerations in my essay on “Two Paradoxes of Privacy Regulation.” But what about the administrative or enforcement burdens associated with each form of information control? I increasingly find that question as interesting as the normative considerations.

If I ever had any hope of “keeping up” with developments in the regulation of information technology, or even the nine specific areas I explored in The Laws of Disruption, that hope was lost long ago. For the last few months I haven’t even been able to keep up with sorting the piles of printouts of stories I’ve “clipped” from a few key sources, including The New York Times, The Wall Street Journal, CNET News.com and The Washington Post.

I’ve just gone through a big pile of clippings that cover April-July. A few highlights: In May, YouTube surpassed 2 billion daily hits. Today, Facebook announced it has more than 500,000,000 members. Researchers last week demonstrated technology that draws device power from radio waves.

Today, China renewed Google’s license to do business in the country, reports The Washington Post. The announcement means that Google will maintain its presence in the country for the foreseeable future. Google will likely meet criticism, but this is good news nonetheless for Chinese Internet users.

The rapidly unfolding Google-China saga has made headline after headline since January, when Google announced that it had suffered an intrusion originating in China. In March, after months of internal debate and heavy public criticism, Google shut down its China-based search engine Google.cn, redirecting all queries to its Hong Kong-based Google.com.hk site. Late last month, Google reactivated some of its China-based services and has continued to operate in China, albeit on a limited basis.

Operating in China has long been a headache for Google, due to the Chinese government’s notorious disregard for Internet freedom, embodied by its infamous “Great Firewall of China.” China surveils all Internet traffic that traverses its borders and attempts to block its citizens from accessing information sources that the government considers unfavorable. China also gleans data from its network to identify and retaliate against political dissidents.

Human rights advocates have long derided Google and other U.S. tech companies, such as Microsoft and Yahoo, for doing business in China. China requires all search engines operating in the country to censor a broad range of information, such as photos of the 1989 Tiananmen Square massacre. Critics contend that complying with the Chinese government’s oppressive demands is unethical and that facilitating censorship and suppression is morally unacceptable on its face.

Such criticisms, however principled, miss the forest for the trees. If Google were to cease its Chinese operations entirely, the result would be one less U.S. Internet firm accessible to Chinese citizens. While Google is the worldwide search leader, in the Chinese search market Google lags behind Baidu, a search company based in China. Baidu’s market share increased after Google shut down its China-based search site. If Google were to pull out of China entirely, chances are Baidu would pick up many more users.

NY venture capitalist Fred Wilson notes eight advantages of using the iPhone’s Safari browser over iPhone apps to access content. Fred’s arguments seem pretty sound to me and help to illustrate the point I was trying to make a few months ago in a heated exchange over Adam’s post on Apple’s App Store, Porn & “Censorship”: Although Apple restricts pornographic apps, it does not restrict what iPhone (or iPad or iPod touch) users can access on their browsers. (And it’s not censorship, anyway, because that’s what governments do!)

As I noted in that exchange, the main practical advantage of apps over the browser right now seems to be the ability to play videos from websites that require Flash, which is especially useful for porn! Apple has rejected Flash on the iPhone on technical grounds in favor of HTML5, which will allow websites to display video without Flash, including on mobile devices. But once HTML5 is implemented (large-scale adoption is expected in 2012), this primary advantage of apps over mobile Safari will disappear: users will be able to view porn in their browsers without relying on apps, and Apple’s control over apps based on their content will no longer matter much, if at all.

Of course, it may take several more years for HTML5 to really become the standard, but what matters is that all Apple products, including mobile Safari, already support HTML5. So it’s just a question of when porn sites move from Flash to HTML5. That seems already to be happening, with major porn publishers already starting the transition. The main stumbling block seems to be HTML5 support from the other browser makers. But Internet Explorer 9 supports HTML5, and is expected out early in 2011 with a beta version due out this August. Mozilla’s Firefox 4.0 (formerly 3.7) also promises HTML5 support and is due out this November. Since porn publishers have always been on the cutting edge of implementing new web technologies, I’d bet we’ll start seeing many porn sites move to HTML5 by this Christmas. And by Christmas 2011, as we all sit around the fire with Grandma sipping eggnog and enjoying our favorite adult websites on our overpriced-but-elegant Apple products loading in HTML5 in the Safari browser, we’ll all look back and wonder why anyone made such a big deal about Apple restricting porn apps.

Oh, and if you get tired of waiting, get an Android phone! Anyway, here are my comments on Adam’s February post:

Not surprisingly, FCC Commissioners voted 3 to 2 today to open a Notice of Inquiry on changing the classification of broadband Internet access from an “information service” under Title I of the Communications Act to “telecommunications” under Title II. (Title II was written for telephone service, and most of its provisions predate the breakup of the former AT&T monopoly.) The story has been widely reported, including posts from The Washington Post, CNET, Computerworld, and The Hill.

As CNET’s Marguerite Reardon counts it, at least 282 members of Congress have already asked the FCC not to proceed with this strategy, including 74 Democrats.

I have written extensively about why a Title II regime is a very bad idea, starting even before the FCC began hinting it would make this attempt. I’ve argued that the move rests on extremely shaky legal ground, usurps the authority of Congress in ways that challenge fundamental Constitutional principles of agency law, would cause serious harm to the Internet’s vibrant ecosystem, and would undermine the Commission’s worthy goals in implementing the National Broadband Plan. No need to repeat any of these arguments here. Reclassification is wrong on the facts, and wrong on the law.

I was interviewed yesterday for the local Fox affiliate on Cal. SB 1411, which criminalizes online impersonations (or “e-personation”) under certain circumstances.

On paper, of course, this sounds like a fine idea. As Palo Alto State Senator Joe Simitian, the bill’s sponsor, put it, “The Internet makes many things easier. One of those, unfortunately, is pretending to be someone else. When that happens with the intent of causing harm, folks need a law they can turn to.”

Or do they?

The Problem with New Laws for New Technology

SB 1411 would make a great exam question or short paper assignment for an information law course. It’s short, loaded with good intentions, and at first blush looks perfectly reasonable, simply extending existing harassment, intimidation, and fraud laws to the modern context of online activity. Unfortunately, a careful read reveals all sorts of potential problems and unintended consequences.

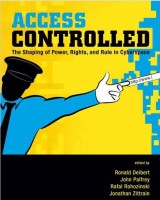

Faithful readers know of my geeky love for tech policy books. I read lots of ’em. Some days a steady stream of Amazon.com boxes piles up on my doorstep because my mailman can’t fit them all in my mailbox. But I go pretty hard on all the books I review, and it’s rare for me to pen a glowing review. Occasionally, however, a book comes along that I think is both worthy of your time and deserving of a place on your bookshelf because it is such an indispensable resource. Access Controlled: The Shaping of Power, Rights, and Rule in Cyberspace is one of those books.

Smartly organized and edited by Ronald J. Deibert, John G. Palfrey, Rafal Rohozinski, and Jonathan Zittrain, Access Controlled is essential reading for anyone studying the methods governments are using globally to stifle online expression and dissent. As I noted of their previous edition, Access Denied: The Practice and Policy of Global Internet Filtering, there is simply no other resource out there like this; it should be required reading in every cyberlaw or information policy program.

The book, which is a project of the OpenNet Initiative (ONI), is divided into two parts. Part 1 includes six chapters on “Theory and Analysis.” They are terrifically informative essays, and the editors have made them all available online here (I’ve listed them below with links embedded). The beefy second part provides a whopping 480 pages(!) of detailed regional and country-by-country overviews of the global state of online speech controls and discusses the long-term ramifications of increasing government meddling with online networks.

In their interesting chapter on “Control and Subversion in Russian Cyberspace,” Deibert and Rohozinski create a useful taxonomy of the three general types of speech and information controls that states are deploying today. What I find most interesting is how, throughout the book, various authors document the increasing movement away from “first generation controls,” epitomized by “Great Firewall of China”-like filtering methods, and toward second- and third-generation controls, which are more refined and harder to monitor. Here’s how Deibert and Rohozinski define those three classes (or “generations”) of controls:

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
