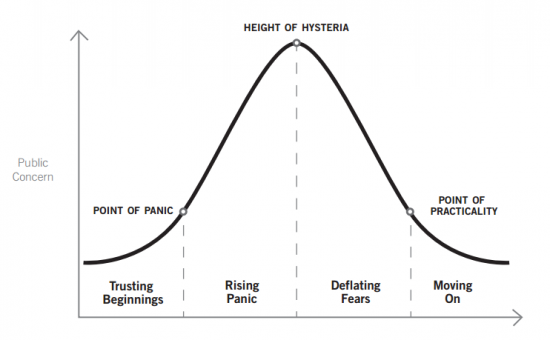

Contemporary tech criticism displays a kind of anti-nostalgia. Instead of reverence for the past, anxiety about the future abounds. In these visions, the future is imagined as a strange, foreign land, beset with problems. And yet, to quote that old adage, tomorrow is the visitor that is always coming but never arrives. The future never arrives because we are assembling it today.

In tech policy, the distance between the now and the future finds its hook in the pacing problem, a term describing the mismatch between advancing technologies and society’s efforts to cope with them. As Vivek Wadhwa explained, “We haven’t come to grips with what is ethical, let alone with what the laws should be, in relation to technologies such as social media.” In *The Laws of Disruption*, Larry Downes described the pacing problem like this: “technology changes exponentially, but social, economic, and legal systems change incrementally.” Or, as Adam Thierer wondered, “What happens when technological innovation outpaces the ability of laws and regulations to keep up?”

Here are three short responses.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
