The way Ben Kunz puts it in a new Business Week article, “Each device contains its own widening universe of services and applications, many delivered via the Internet. They are designed to keep you wedded to a particular company’s ecosystem and set of products.”

I like Ben’s article a lot because it recognizes that “walling off” and a “widening universe” are not mutually exclusive. If only policymakers and regulators acknowledged that. They must know it, but admitting it means acknowledging their limited relevance to consumer well-being and a need to step aside. So they feign ignorance.

Many claim to worry about the rise of proprietary services (I, as you can probably tell, often doubt their sincerity) but I’ve always regarded a “Splinternet” as a good thing that means more, not less, communications wealth. I first wrote about this in Forbes in 2000 when everyone was fighting over spam, privacy, content regulation, porn and marketing to kids.

Increasing wealth means a copy-and-paste world for content across networks, and it means businesses will benefit from presence across many of tomorrow’s networks, generating more value for future generations of consumers and investors. We won’t likely talk of an “Internet” with a capital-“I” and a reverent tremble the way we do now, because what matters is not the Internet as it happens to look right now, but underlying Internet technology that can just as easily erupt everywhere else, too.

Meanwhile, new application, device and content competition within and across networks disciplines the market process and “regulates” things far better than the FCC can. Yet the FCC’s very function is to administer or artificially direct proprietary business models, which it must continue to attempt to do (and as it pleads for assistance in doing in the net neutrality rulemaking) if it is going to remain relevant. I described the urgency of stopping the agency’s campaign recently in “Splinternets and cyberspaces vs. net neutrality,” and also in the January 2010 comments to the FCC on net neutrality.

Continue reading →

Progress Snapshot 6.6, The Progress & Freedom Foundation (PDF)

Mobile broadband speeds (at the “core” of wireless networks) are about to skyrocket—and revolutionize what we can do on-the-go online (at the “edge”). Consider four recent stories:

- Networks: MobileCrunch notes that Verizon will begin offering 4G mobile broadband service (using Long Term Evolution or LTE) “in up to 60 markets by mid-2012.” At an estimated 5-12 Mbps down and 2-5 Mbps up, LTE would be faster than most wired broadband service.

- Devices: Sprint plans to launch its first 4G phone (using WiMax, a competing standard to LTE) this summer.

- Applications: Google has finally released Google Earth for the Nexus One smartphone on T-Mobile, the first to run Google’s Android 2.1 operating system.

- Content: In November, Google announced that YouTube would begin offering high-definition 1080p video, including on mobile devices.

While the Nexus One may be the first Android phone with a processor powerful enough to crunch the visual awesomeness that is Google Earth, such applications will still chug along on even the best of today’s 3G wireless networks. But combine the ongoing increases in mobile device processing power made possible by Moore’s Law with similar innovation in broadband infrastructure, and everything changes: You can run hugely data-intensive apps that require real-time streaming, from driving directions with all the rich imagery of Google Earth to mobile videoconferencing to virtual world experiences that rival today’s desktop versions to streaming 1080p high-definition video (3.7+ Mbps) to… well, if I knew, I’d be in Silicon Valley launching a next-gen mobile start-up!

This interconnection of infrastructure, devices and applications should remind us that broadband isn’t just about “big dumb pipes”—especially in the mobile environment, where bandwidth is far more scarce (even in 4G) due to spectrum constraints. Network congestion can spoil even the best devices on the best networks. Just ask users in New York City, where AT&T has apparently just stopped selling the iPhone online in order to try to relieve AT&T’s over-taxed network under the staggering bandwidth demands of Williamsburg hipsters, Latter-Day Beatniks from the Village, Chelsea boys, and Upper West Side Charlotte Yorks all streaming an infinite plethora of YouTube videos and so on. Continue reading →

Today’s The Wall Street Journal Europe published an editorial that Alberto Mingardi of Istituto Bruno Leoni and I penned about the competition complaints brought against Google in Europe.

If policy makers set the terms in a primitive year like 2010, nobody will have to respond to Google.

By WAYNE CREWS AND ALBERTO MINGARDI

Google isn’t a monopoly now, but the more it tries to become one, the better it will be for us all. Competition works in this way: Capitalist enterprises strive to gain in profits and market share. In turn, competitors are forced to respond by trying to improve their offerings. Innovation is the healthy output of this competitive game. The European Commission, while pondering complaints against the Internet search giant, might consider this point.

Google has been challenged by a German, a British, and a French Web site, for its dominant position in the market for Web search and online advertisement. The U.S. search engine is said to be imposing difficult terms and conditions on competitors and partners, who are now calling regulators into action. Google’s search algorithm is accused of being “biased” by business partners and competing publishers alike.

Before resorting to the old commandments of antitrust, we should consider that the Internet world is still largely impervious and unknown to anybody—including regulators. We are in terra incognita, and nobody knows the likely evolution of the market. But one thing is for sure: Online search can’t evolve properly if it’s improperly regulated—no matter the stage of evolution.

Continue reading →

So, do I need to remind everyone of my ongoing rants about Jonathan Zittrain’s misguided theory about the death of digital generativity because of the supposed rise of “sterile, tethered” devices? I hope not, because even I am getting sick of hearing myself talk about it. But here again anyway is the obligatory listing of all my tirades: 1, 2, 3, 4, 5, 6, 7, 8 + video and my 2-part debate with Lessig and him last year.

You will recall that the central villain in Zittrain’s drama The Future of the Internet and How to Stop It is big bad Steve Jobs and his wicked little iPhone. And then, more recently, Jonathan has fretted over those supposed fiends at Facebook. Zittrain worries that “we can get locked into these platforms” and that “markets [will] coalesce [around] these tamer gated communities,” making it easier for both corporations and governments to control us. More generally, Zittrain just doesn’t seem to like that some people don’t always opt for the same wide open general purpose PC experience that he exalts as the ideal. As I noted in my original review of his book, Jonathan doesn’t seem to appreciate that it may be perfectly rational for some people to seek stability and security in digital devices and their networking experiences, even if they find those solutions in the form of “tethered appliances” or “sterile” networks, to use his parlance.

Every once in a while I find a sharp piece by someone out there who is willing to admit that they see nothing wrong with such “closed” platforms or devices, or who even argues that those approaches can be superior to the more “open” devices and platforms out there. That’s the case with this Harry McCracken rant over at Technologizer today with the entertaining title, “The Verizon Droid is a Loaf of Day-Old Bread.” McCracken goes really hard on the Droid (which hurts because I own one!) and I’m not sure I entirely agree with his complaint about it, but what’s striking is how it represents the antithesis of Zittrainianism: Continue reading →

Until recently, Amazon and its Kindle were the only real e-reader game in town. This allowed them to force on publishers an arguably arbitrary (and low) price of $9.99 for bestsellers. With the introduction of Apple’s iPad, however, publishers now have a viable competitor to which they can defect. The result will likely be higher e-book prices in the near term, and this has prompted some to point out that this is a case where more competition resulted in higher prices for consumers.

The key phrase in the previous paragraph, however, is “near term.” It’s interesting to see that five years after it began offering video in the iTunes store, Apple is apparently pushing TV producers to lower their prices by half from $1.99 an episode to 99¢. Market processes–especially those surrounding new technology and distribution channels–can be less than instantaneous, but they have a way of ultimately conforming to economic reality.

Reporting on the ongoing negotiations with Apple, the New York Times says, “Television production is expensive, and the networks are wary of selling shows for less.” But the economic reality they’re missing is that TV production is a fixed cost, and as my friend Tim Lee has pointed out many times, the marginal cost of digital distribution is basically zero. As a result, I wouldn’t be surprised if, five years from now, we see Apple badgering book publishers to cut their prices in half.

At least that’s how my former colleague Tom Miller, now at the American Enterprise Institute, used to put it. Still another government/business funded report, this one called “Nanotechnology: a UK Industry View” reaches yet again the same conclusions about nanotechnology as the ones that pop out occasionally like the U.S. Environmental Protection Agency’s “Nanotechnology White Paper” or the Food and Drug Administration’s “Nanotechnology.”

The conclusions always secure an open-ended role for political bodies to govern private endeavors, and since the business parties are so dependent on political funding, they have to go along with it, cut off from envisioning an alternative approach.

The reports say–brace for it–that governments should fund nanotechnology and study nanotechnology’s risks; and that they should then regulate the technology’s undefined and unknown risks besides. This approach, so different from, say, the way software is produced and marketed, assures that there will never be a “Bill Gates of nanotechnology” (or in another sector, a Bill Gates of biotechnology, as CEI’s Fred Smith often puts it). If every single new advance requires FDA medical-device-style approvals, this is an industry that cannot begin to reach its potential. Continue reading →

Mashable has reported that “The Internet” has made the list of Nobel Peace Prize nominees this year. This prize has already had its fair share of controversial and sometimes even comical nominees and recipients, but this sort of nomination is disappointing in a whole different way—it ignores the fact that individual human beings actually invented the technology that created the Internet.

The sentiment behind this nomination, popularized by Italy’s version of Wired, is understandable. The Internet has had such an effect on the world in such a short amount of time that it’s impossible to calculate the enormity of its effects on science, the arts, or politics. It has generated a mountainous amount of wealth, exposed the barbarism of tyrannical regimes worldwide, and made more knowledge accessible to more people than ever before.

But people like Tim Berners-Lee or Robert Taylor should be considered for the prize given their tremendous contributions to Internet technology. Both Berners-Lee and Taylor have already been recognized for their contributions to technological progress (Berners-Lee has an alphabet soup of honor-related suffixes after his name), but awarding the Nobel Prize isn’t just about accolades; it’s also about money. The 2009 prizes were roughly $1.4 million each, which would be a nice sum for a foundation dedicated to the advancement of Internet technologies, like Berners-Lee’s World Wide Web Foundation. When considering this, it’s clear that awarding the prize to an individual would do a lot more good than if the concept or idea of the Internet received the prize.

Even so, Web 2.0 evangelists, prominent intellectuals, and even 2003 Nobel Peace Prize winner Shirin Ebadi have backed the notion of the prize being awarded to the Internet itself—a new campaign is calling this “A Nobel for Each and Every One of Us.” While the power of the Internet does indeed flow from its uniting “each and every one of us,” the technology that allowed this miracle to exist was invented by people like Berners-Lee and Taylor who dedicated years of their lives to the advancement of human understanding. Even in this era of wise crowds, social networks, and “collective intelligence,” this sort of individual accomplishment should be recognized.

If you’d like to nominate any other person involved in the advancement of Internet technology for the Peace Prize, please drop a name in the comments.

I’ve always generally agreed with the conventional wisdom about micropayments as a method of funding online content or services: Namely, they won’t work. Clay Shirky, Tim Lee, and many others have made the case that micropayments face numerous obstacles to widespread adoption. The primary issue seems to be the “mental transaction cost” problem: People don’t want to be diverted–even for just a few seconds–from what they are doing to pay a fee, no matter how small. [That is why advertising continues to be the primary monetization engine of the Internet and digital services.]

That being said, I keep finding examples of how micropayments do work in some contexts and it has kept me wondering if there’s still a chance for micropayments to work in other contexts (like funding media content). For example, I mentioned here before how shocked I was when I went back and looked at my eBay transactions for the past couple of years and realized how many “small-dollar” purchases I had made via PayPal (mostly dumb stickers and other little trinkets). And the micropayment model also seems to be doing reasonably well in the online music world. In January 2009, Apple reported that the iTunes Music Store had sold over 6 billion tracks.

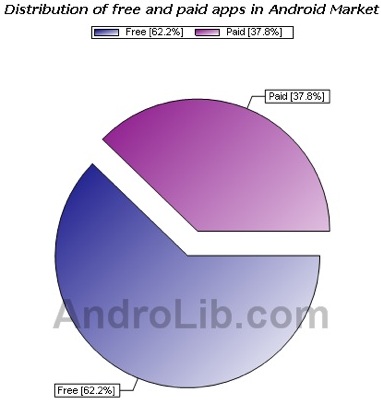

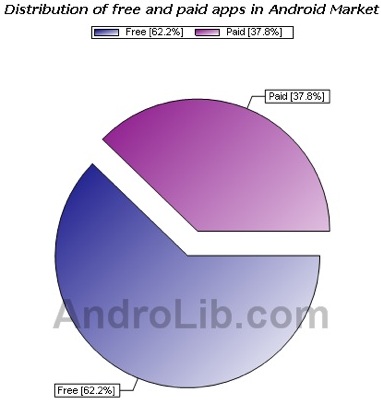

And then there are mobile application stores. Just recently I picked up a Droid and I’ve been taking advantage of the rapidly growing Android marketplace, which recently hit the 20,000 apps mark. Like Apple’s 100,000-strong App Store, there’s a nice mix of paid and free apps, and even though I’m downloading mostly freebies, I’ve started buying more paid apps. Many of them are “upsells” from free apps I downloaded. In most cases, they are just 99 cents. A few examples of paid apps I’ve downloaded or considered buying: Stocks Pro, Mortgage Calc Pro, Currency Guide, Photo Vault, Weather Bug Elite, and Find My Phone. And there are all sorts of games, clocks, calendars, ringtones, health apps, sports stuff, utilities, and more that are 99 cents or $1.99. Some are more expensive, of course.

Continue reading →

by James Dunstan & Berin Szoka* (PDF)

Originally published in Forbes.com on December 17, 2009

As world leaders meet in Copenhagen to consider drastic carbon emission restrictions that could require large-scale de-industrialization, experts gathered last week just outside Washington, D.C. to discuss another environmental problem: Space junk.[1] Unlike with climate change, there’s no difference of scientific opinion about this problem—orbital debris counts increased 13% in 2009 alone, with the catalog of tracked objects swelling to 20,000, and estimates of over 300,000 objects in total; most too small to see and all racing around the Earth at over 17,500 miles per hour. Those are speeding bullets, some the size of school buses, and all capable of knocking out a satellite or manned vehicle.

At stake are much more than the $200 billion a year satellite and launch industries and jobs that depend on them. Satellites connect the remotest locations in the world; guide us down unfamiliar roads; allow Internet users to view their homes from space; discourage war by making it impossible to hide armies on another country’s borders; are utterly indispensable to American troops in the field; and play a critical role in monitoring climate change and other environmental problems. Orbital debris could block all these benefits for centuries, and prevent us from developing clean energy sources like space solar power satellites, exploring our Solar System and some day making humanity a multi-planetary civilization capable of surviving true climatic catastrophes.

The engineering wizards who have fueled the Information Revolution through the use of satellites as communications and information-gathering tools also overlooked the pollution they were causing. They operated under the “Big Sky” theory: Space is so vast, you don’t have to worry about cleaning up after yourself. They were wrong. Just last February, two satellites collided for the first time, creating over 1,500 new pieces of junk. Many experts believe we are nearing the “tipping point” where these collisions will cascade, making many orbits unusable.

But the problem can be solved. Thus far, governments have simply tried to mandate “mitigation” of debris-creation. But just as some warn about “runaway warming,” we know that mitigation alone will not solve the debris problem. The answer lies in “remediation”: removing just five large objects per year could prevent a chain reaction. If governments attempt to clean up this mess themselves, the cost could run into the trillions—rivaling even some proposed climate change solutions.

Instead, space-faring nations should create an Orbital Debris Removal and Recycling Fund (ODRRF). Continue reading →

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.
