What works well as an ethical directive might not work equally well as a policy prescription. Stated differently, what one ought to do in certain situations should not always be synonymous with what they must do by force of law.

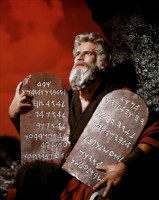

I’m going to relate this lesson to tech policy debates in a moment, but let’s first think of an example of how this lesson applies more generally. Consider the Ten Commandments. Some of them make excellent ethical guidelines (especially the stuff about not coveting your neighbor’s house, wife, or possessions). But most of us would agree that, in a free and tolerant society, only two of the Ten Commandments make good law: Thou shalt not kill and Thou shalt not steal.

In other words, not every sin should be a crime. Perhaps some should be; but most should not. Taking this out of the realm of religion and into the world of moral philosophy, we can apply the lesson more generally as: Not every wise ethical principle makes for wise public policy.

Before I get accused of being some sort of nihilist, I want to be clear that I am absolutely not saying that ethics should never have a bearing on policy. Obviously, all political theory is, at some level, reducible to ethical precepts. My own political philosophy is strongly rooted in the Millian harm principle (“The only purpose for which power can be rightfully exercised over any member of a civilized community, against his will, is to prevent harm to others.”) Not everyone will agree with Mill’s principle, but I would hope most of us could agree that, if we hope to preserve a free and open society, we simply cannot convert every ethical directive into a legal directive, or else the scope of human freedom will shrink precipitously.

Can We Plan for Every “Bad Butterfly-Effect”?

Anyway, what got me thinking about all this and its applicability to technology policy was an interesting Wired essay by Patrick Lin entitled “The Ethics of Saving Lives With Autonomous Cars Are Far Murkier Than You Think.” Lin is the director of the Ethics + Emerging Sciences Group at California Polytechnic State University in San Luis Obispo and lead editor of Robot Ethics (MIT Press, 2012). So, this is a man who has obviously done a lot of thinking about the potential ethical challenges presented by the growing ubiquity of robots and autonomous vehicles in society. (His column makes for particularly fun reading if you’ve ever spent time pondering Asimov’s “Laws of Robotics.”)

Lin walks through various hypothetical scenarios regarding the future of autonomous vehicles and discusses the ethical trade-offs at work here. He asks a number of questions about a future of robotic cars and encourages us to give some thoughtful deliberation to the benefits and potential costs of autonomous vehicles. I will not comment here on all the specific issues that lead Lin to question whether autonomous vehicles are worth it; instead, I want to focus on Lin’s ultimate conclusion.

In commenting on the potential risks and trade-offs, Lin notes:

The introduction of any new technology changes the lives of future people. We know it as the “butterfly effect” or chaos theory: Anything we do could start a chain-reaction of other effects that result in actual harm (or benefit) to some persons somewhere on the planet.

That’s self-evident, of course, but what of it? How should that truism influence tech ethics and/or tech policy? Here are Lin’s thoughts:

For us humans, those effects are impossible to precisely predict, and therefore it is impractical to worry about those effects too much. It would be absurdly paralyzing to follow an ethical principle that we ought to stand down on any action that could have bad butterfly-effects, as any action or inaction could have negative unforeseen and unintended consequences.

But … we can foresee the general disruptive effects of a new technology, especially the nearer-term ones, and we should therefore mitigate them. The butterfly-effect doesn’t release us from the responsibility of anticipating and addressing problems the best we can.

As we rush into our technological future, don’t think of these sorts of issues as roadblocks, but as a sensible yellow light — telling us to look carefully both ways before we cross an ethical intersection.

Lin makes some important points here, but these closing comments (and his article more generally) carry a whiff of “precautionary principle” thinking that makes me more than a bit uncomfortable. The precautionary principle generally holds that, because a new idea or technology could pose some theoretical danger or risk in the future, public policies should control or limit the development of such innovations until their creators can prove that they won’t cause any harms. Before we walk down that precautionary path, we need to consider the consequences.

The Problem with Precaution

I have spent a great deal of time writing about the dangers of precautionary principle thinking in my recent articles and essays, including my law review article, “Technopanics, Threat Inflation, and the Danger of an Information Technology Precautionary Principle,” as well as in two lengthy blog posts asking the questions, “Who Really Believes in ‘Permissionless Innovation’?” and “What Does It Mean to ‘Have a Conversation’ about a New Technology?”

The key point I try to get across in those essays is that letting such precautionary thinking guide policy poses a serious threat to technological progress, economic entrepreneurialism, social adaptation, and long-run prosperity. If public policy is guided at every turn by the precautionary principle, technological innovation is impossible because of fear of the unknown; hypothetical worst-case scenarios trump all other considerations. Social learning and economic opportunities become far less likely, perhaps even impossible, under such a regime. In practical terms, it means fewer services, lower quality goods, higher prices, diminished economic growth, and a decline in the overall standard of living.

In Lin’s essay, we see some precautionary reasoning at work when he argues that “we can foresee the general disruptive effects of a new technology, especially the nearer-term ones, and we should therefore mitigate them” and that we have “responsibility [for] anticipating and addressing problems the best we can.”

To be fair, Lin caveats this by first noting that precise effects are “impossible to predict” and, therefore, that “It would be absurdly paralyzing to follow an ethical principle that we ought to stand down on any action that could have bad butterfly-effects, as any action or inaction could have negative unforeseen and unintended consequences.” Second, as it relates to general effects, he says we should just be “addressing problems the best we can.”

Despite those caveats, I continue to have serious concerns about the potential blurring of ethics and law here. The most obvious question I would have for Lin is: Who is the “we” in this construct? Is it “we” as individuals and institutions interacting throughout society freely and spontaneously, or is it “we” as in the government imposing precautionary thinking through top-down public policy?

I can imagine plenty of scenarios in which a certain amount of precautionary thinking may be entirely appropriate if applied as an informal ethical norm at the individual, household, organizational or even societal level, but which would not be as sensible if applied as a policy prescription. For example, parents should take steps to shield their kids from truly offensive and hateful material on the Internet before they are mature enough to understand the ramifications of it. But that doesn’t mean it would be wise to enshrine the same principle into law in the form of censorship.

Similarly, there are plenty of smart privacy and security norms that organizations should practice that need not be forced on them by law, especially since such mandates would carry serious costs. For example, I think that organizations should feel a strong obligation to safeguard user data and avoid privacy and security screw-ups. I’d like to see more organizations using encryption wherever they can in their systems and deleting unnecessary data whenever possible. But, for a variety of reasons, I do not believe any of these things should be mandated through law or regulation.

Don’t Foreclose Experimentation

While Lin rightly acknowledges the “negative unforeseen and unintended consequences” of preemptive policy action to address precise concerns, he does not unpack the full ramifications of those unseen consequences. Nor does he explain how the royal “we” should separate the “precise” concerns from the “general” ones. (For example, are the specific issues I just raised in the preceding paragraphs “precise” or “general”? What’s the line between the two?)

But I have a bigger concern with Lin’s argument, as well as with the field of technology ethics more generally: We rarely hear much discussion of the benefits associated with the ongoing process of trial-and-error experimentation and, more importantly, the benefits of failure and what we learn, both individually and collectively, from the mistakes we inevitably make.

The problem with regulatory systems is that they are permission-based. They focus on preemptive remedies that aim to forecast the future, and future mistakes (i.e., Lin’s “butterfly effects”) in particular. Worse yet, administrative regulation generally preempts or prohibits the beneficial experiments that yield new and better ways of doing things, including what we learn from failed efforts at doing things. But we will never discover better ways of doing things unless the process of evolutionary, experimental change is allowed to continue. We need to keep trying and failing in order to learn how we can move forward. As Samuel Beckett once counseled: “Ever tried. Ever failed. No matter. Try again. Fail again. Fail better.” Real wisdom is born of experience, especially of the mistakes we make along the way.

This is why I feel so passionately about drawing a distinction between ethical norms and public policy pronouncements. Law forecloses. It is inflexible. It does not adapt as efficiently or rapidly as social norms do. Ethics and norms provide guidance but also leave plenty of breathing room for ongoing experimentation, and they are refined continuously and in response to ongoing social developments.

It is worth noting that ethics evolve, too. There is a sort of ethical trial-and-error that occurs in society over time as new developments challenge, and then change, old ethical norms. This is another reason we want to be careful about enshrining norms into law.

Thus, policymakers should not be imposing prospective restrictions on new innovations without clear evidence of actual, not merely hypothesized, harm. That’s especially the case since, more often than not, humans adapt to new technologies and find creative ways to assimilate even the most disruptive innovations into their lives. We cannot possibly plan for all the “bad butterfly-effects” that might occur, and attempts to do so will result in significant sacrifices in terms of social and economic liberty.

The burden of proof should be on those who advocate preemptive restrictions on technological innovation to show why freedom to tinker and experiment must be foreclosed by policy. There should exist the strongest presumption that the freedom to innovate and experiment will advance human welfare and teach us new and better ways of doing things to overcome most of those “bad butterfly-effects” over time.

So, in closing, let us yield at Lin’s “sensible yellow light — telling us to look carefully both ways before we cross an ethical intersection.” But let us not be cowed into an irrational fear of an unknown and ultimately unknowable future. And let us not be tempted to try to plan for every potential pitfall through preemptive policy prescriptions, lest progress and prosperity get sacrificed as a result of such hubris.

The Technology Liberation Front is the tech policy blog dedicated to keeping politicians' hands off the 'net and everything else related to technology.